- unwind ai

- Posts

- 40 KB Conversational AI Model

40 KB Conversational AI Model

+ Drag-and-drop AI Agent Designer in Vertex AI, Open source MiniMax 2.1

Before we start, wishing you a very happy 2026 🎊 Last year was the year of AI agents, reasoning models, open-source models from China, AI browsers, and an explosion of vibe coding.

And 2026 will be even wilder. Here are a few things that you may see this year:

Vibe coding will become mainstream,

Distribution might be the only moat as AI makes code a commodity, and

Probably the first billion-dollar company run by a single person and 1000+ AI Agents.

Today’s top AI Highlights:

& so much more!

Read time: 3 mins

AI Tutorial

Google recently launched the Interactions API alongside Gemini Deep Research, an autonomous research agent that can conduct comprehensive multi-step investigations.

This is a significant shift from traditional APIs - instead of stateless request-response cycles, you get server-side state management, background execution for long-running tasks, and seamless handoffs between different models and agents.

In this tutorial, we'll build an AI Research Planner & Executor Agent that demonstrates these capabilities in action. The system uses a three-phase workflow: Gemini 3 Flash creates research plans, Deep Research Agent executes comprehensive web investigations, and Gemini 3 Pro synthesizes findings into executive reports with auto-generated infographics.

We share hands-on tutorials like this every week, designed to help you stay ahead in the world of AI. If you're serious about leveling up your AI skills and staying ahead of the curve, subscribe now and be the first to access our latest tutorials.

Latest Developments

A language model just ran on a processor from 1976. Not a stripped-down demo, not a proof-of-concept - a full conversational AI with inference, weights, and a chat interface, all packed into a 40KB binary that boots on a Z80 with 64KB of RAM.

A developer created Z80-μLM to answer a simple question: how small can you go while still being useful?

Z80-μLM is a 'conversational AI' that generates short character-by-character sequences, with quant-aware training designed specifically for 8-bit hardware constraints. Each weight is just 2 bits ({-2, -1, 0, +1}), packed four per byte. The model responds to input patterns rather than exact words through trigram hashing, where "are you a robot" and "robot are you" trigger identical representations.

Responses are deliberately terse (1-2 words max), which turns out to be a feature rather than a limitation - short outputs like "MAYBE," "WHY?," or "AM I?" can convey surprising nuance when you're forced to infer meaning from context.

Key Highlights:

Quantization-Aware Training - Training runs both float and integer versions in parallel, progressively pushing weights toward the 2-bit grid. This prevents the model from finding solutions that only work in float and collapse when quantized.

Trigram Hash Encoding - Input text hashes into 128 buckets for fuzzy matching. It handles typos naturally but loses word order—"hello there" and "there hello" look identical to the model.

Character-by-Character Generation - Outputs text one character at a time from a ~35 character set. The model tracks the last 8 characters it generated to maintain basic conversational flow.

Pre-Built Examples & Training Tools - Ships with a conversational chatbot and a 20 Questions game. Includes utilities to generate training data using Ollama or Claude API.

Try it yourself on GitHub or check out the training guide to build your own 8-bit conversational AI.

Your competitors are already automating. Here's the data.

Retail and ecommerce teams using AI for customer service are resolving 40-60% more tickets without more staff, cutting cost-per-ticket by 30%+, and handling seasonal spikes 3x faster.

But here's what separates winners from everyone else: they started with the data, not the hype.

Gladly handles the predictable volume, FAQs, routing, returns, order status, while your team focuses on customers who need a human touch. The result? Better experiences. Lower costs. Real competitive advantage. Ready to see what's possible for your business?

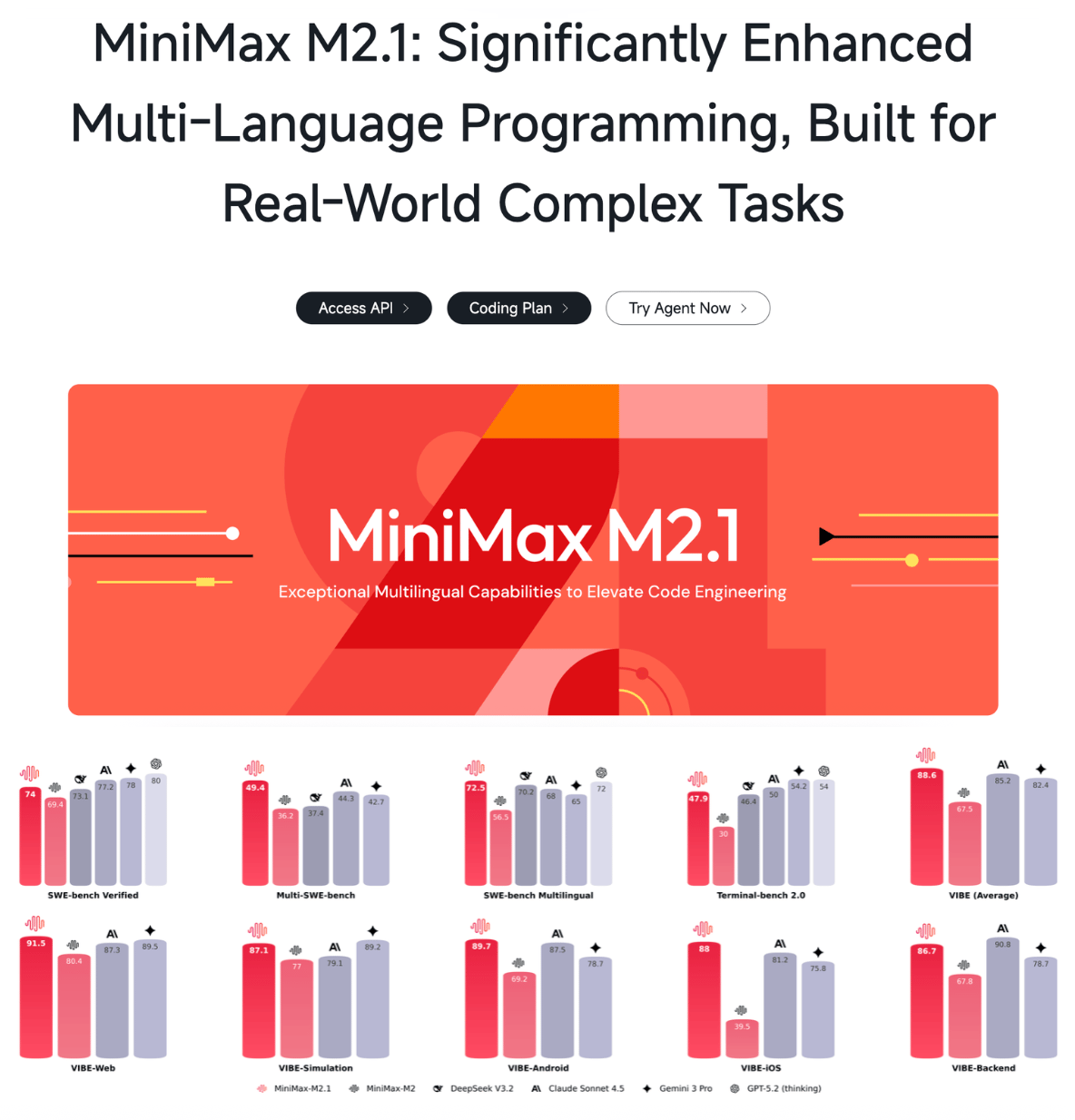

An open source 230B parameter model with only 10B active parameters just scored 74% on SWE-bench Verified and 49.4% on Multi-SWE-Bench, matching Claude Sonnet 4.5 across coding tasks.

MiniMax has released M2.1, an upgraded version of their open-source coding and agentic model that brings significant improvements in cross-language development, native mobile app creation, and office automation workflows.

The new model delivers cleaner outputs with reduced verbosity, faster response times, and lower token consumption. M2.1 works seamlessly across popular coding tools like Claude Code, Droid, Cline, and Kilo Code, and now comes with automatic caching enabled by default for even better performance.

Key Highlights:

Cross-Language Excellence - M2.1 achieves 72.5% on SWE-Multilingual benchmark, outperforming Claude Sonnet 4.5 in languages like Rust, Java, Golang, C++, Kotlin, and TypeScript; no Python-centric bias that’s there in many competitors.

Full-Stack App Development - Scored 88.6 on VIBE (Visual & Interactive Benchmark for Execution), a new benchmark that tests real application building from scratch. M2.1 excels at creating web interfaces with advanced aesthetics, native Android/iOS apps, 3D animations, and complex simulations.

Office Automation - The model can control browser interactions through text commands, completing end-to-end tasks like collecting equipment requests via Slack, retrieving prices from internal docs, calculating budgets, and updating records.

Availability - Comes in two API versions: standard M2.1 and M2.1-lightning (faster speeds, same results). The model weights are also open-source for local deployment and use.

Quick Bites

Google Disco to make your browser tabs work for you

Google says your browser tabs shouldn't just sit there; they should work for you. The company's new Labs experiment, Disco, introduces GenTabs: AI-generated web apps that spin up from your open tabs using Gemini 3. Instead of mentally juggling dozens of tabs while planning a trip or researching topics, GenTabs automatically creates interactive apps based on whatever you're working on, with absolutely no code and full source linking back to the original pages. You can sign up for the waitlist.

Drag-and-drop AI Agent Designer in Vertex AI

Google Cloud's new Agent Designer lets you build AI agents with drag-and-drop, then export the whole thing to code when you're ready to ship. It's a visual canvas where you can wire up flows, add subagents, connect tools (Google Search, Vertex AI Search, MCP servers), and test everything in a preview pane—no context switching between prototyping and production. Think of it as a halfway house between low-code speed and full code control, with ADK integration for when you want to take over manually.

Plan before you build in Gemini CLI with Conductor

Google's Gemini CLI now has Conductor, an extension that treats your project context as a managed artifact rather than ephemeral chat history. Instead of diving straight into code, it walks you through creating persistent specs and plans in Markdown files that live alongside your codebase, particularly useful for brownfield projects where AI tools typically stumble. The extension gives three new features/phases: /conductor:setup for project context, /conductor:newTrack for specs and planning, and /conductor:implement for execution.

Tools of the Trade

AGI Mobile - A voice-controlled AI agent that actually navigates your phone and gets things done for you, like booking Ubers, adjusting settings, handling messages, without you touching the screen. In Android preview now, iOS support coming soon.

Ensue Memory - A persistent context layer for Claude Code that stores your setup decisions, preferences, and conversation history across sessions using semantic and temporal search. It maintains a knowledge tree that carries forward past decisions, research, and patterns from previous conversations.

Amazon Bedrock AgentCore Samples - AWS's official sample repository for Amazon Bedrock AgentCore. Covers tutorials, use cases, and integrations covering AgentCore's core capabilities: Runtime, Gateway (API-to-MCP tool conversion), Memory, Identity, built-in tools, and Observability.

Worktrunk - A CLI for git worktree management for running AI agents in parallel. Its three core commands make worktrees as easy as branches. Plus, it has a bunch of quality-of-life features to simplify working with many parallel changes, including hooks to automate local workflows.

Awesome LLM Apps - A curated collection of LLM apps with RAG, AI Agents, multi-agent teams, MCP, voice agents, and more. The apps use models from OpenAI, Anthropic, Google, and open-source models like DeepSeek, Qwen, and Llama that you can run locally on your computer.

(Now accepting GitHub sponsorships)

Hot Takes

You can't finetune a Chinese model and claim that as a contribution to the urgent need for more Western open weight models.

Yes, people are doing that.

AGI fully realized will actually give people a choice:

relax

or

work harder on bigger more ambitious things than you ever thought possible

~ Garry Tan

That’s all for today! See you tomorrow with more such AI-filled content.

Don’t forget to share this newsletter on your social channels and tag Unwind AI to support us!

PS: We curate this AI newsletter every day for FREE, your support is what keeps us going. If you find value in what you read, share it with at least one, two (or 20) of your friends 😉

Reply