Build AI Agents in Just 3 Lines of Code

PLUS: Grok 4 Heavy reasoning with any LLM, Llama 4 Behemoth shelved

Today’s top AI Highlights:

AI Agents with emergent behaviour in just 3 lines of code

Simulate Grok 4 Heavy reasoning with any other LLM

Is the open-source Llama series dead?

Mistral AI open-sources frontier speech recognition models

Email for AI agents, not AI agents for emails

& so much more!

Read time: 3 mins

AI Tutorial

Business consulting has always required deep market knowledge, strategic thinking, and the ability to synthesize complex information into actionable recommendations. Today's fast-paced business environment demands even more - real-time insights, data-driven strategies, and rapid response to market changes.

In this tutorial, we'll create a powerful AI business consultant using Google's Agent Development Kit (ADK) combined with Perplexity AI for real-time web research. This consultant will conduct market analysis, assess risks, and generate strategic recommendations backed by current data, all through a clean, interactive web interface.

We share hands-on tutorials like this every week, designed to help you stay ahead in the world of AI. If you're serious about leveling up your AI skills and staying ahead of the curve, subscribe now and be the first to access our latest tutorials.

Latest Developments

You know that feeling when you want Grok Heavy's multi-agent magic but can't justify the $300/month price tag?

A developer just dropped Make it Heavy, a Python framework that emulates that multi-agent setup with any LLM you want via OpenRouter. You can now run parallel AI agents using Kimi K2, GPT-4o, Llama 3, Claude, Gemini, or any of the 300+ models available on OpenRouter.

The framework orchestrates multiple agents that run in continuous loops until task completion. When you submit a query, the system generates specialized research questions, deploys multiple agents to investigate different aspects simultaneously, then synthesizes their findings into comprehensive responses.
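To make that flow concrete, here is a minimal sketch of the fan-out-and-synthesize pattern described above. It is not Make it Heavy's actual code: `ask_model`, `make_subquestions`, and `orchestrate` are hypothetical names, and `ask_model` is a stub standing in for a real LLM call so the example runs offline.

```python
import asyncio


async def ask_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call (for example, via OpenRouter)."""
    await asyncio.sleep(0)  # yield control, as a network request would
    return f"answer to: {prompt}"


def make_subquestions(query: str, n: int = 4) -> list[str]:
    # Assumption: a real system would ask the model to write these questions.
    # Fixed angles keep the sketch deterministic.
    angles = ["background", "evidence", "counterarguments", "open questions"]
    return [f"{query} ({angle})" for angle in angles[:n]]


async def orchestrate(query: str, n_agents: int = 4) -> str:
    # 1. Break the query into specialized research questions.
    questions = make_subquestions(query, n_agents)
    # 2. Run one agent per question concurrently.
    findings = await asyncio.gather(*(ask_model(q) for q in questions))
    # 3. Synthesize the findings with one final model call.
    return await ask_model("Synthesize:\n" + "\n".join(findings))


if __name__ == "__main__":
    print(asyncio.run(orchestrate("Is solar power cost-competitive?")))
```

Swapping the stub for a real client should only require changing `ask_model`; the orchestration logic stays the same.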

Key Highlights:

Multi-agent orchestration - Deploy 4+ specialized agents in parallel with real-time progress tracking, then synthesize their different perspectives into a unified, comprehensive analysis without monthly subscription fees.

Hot-swappable architecture - Automatically discovers tools from the tools directory and supports dynamic model switching through simple config changes, making experimentation effortless.

Dual operation modes - Run single agents for simple tasks or full multi-agent orchestration for complex analysis, with built-in tools for web search, calculations, and file operations.

Model support - Choose from 300+ models on OpenRouter, including Gemini, Kimi K2, and Llama variants, giving you the flexibility to optimize for cost, speed, or reasoning capability.
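The "automatically discovers tools from the tools directory" idea can be sketched with the standard library alone. This is an illustration under assumptions, not the framework's real loader: the convention that each tool file exposes a `run` callable, and the name `discover_tools`, are made up for this example.

```python
import importlib.util
from pathlib import Path


def discover_tools(tools_dir: str) -> dict:
    """Load every *.py file in tools_dir and collect its `run` callable.

    Assumption: each tool module exposes a function named `run`.
    Files starting with an underscore are treated as private and skipped.
    """
    tools = {}
    for path in sorted(Path(tools_dir).glob("*.py")):
        if path.name.startswith("_"):
            continue
        # Import the file as a module without requiring it to be on sys.path.
        spec = importlib.util.spec_from_file_location(path.stem, path)
        module = importlib.util.module_from_spec(spec)
        spec.loader.exec_module(module)
        run = getattr(module, "run", None)
        if callable(run):
            tools[path.stem] = run  # tool name = file name without .py
    return tools
```

With a loader like this, dropping a new file into the directory is enough to register a tool; no central list has to be edited.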

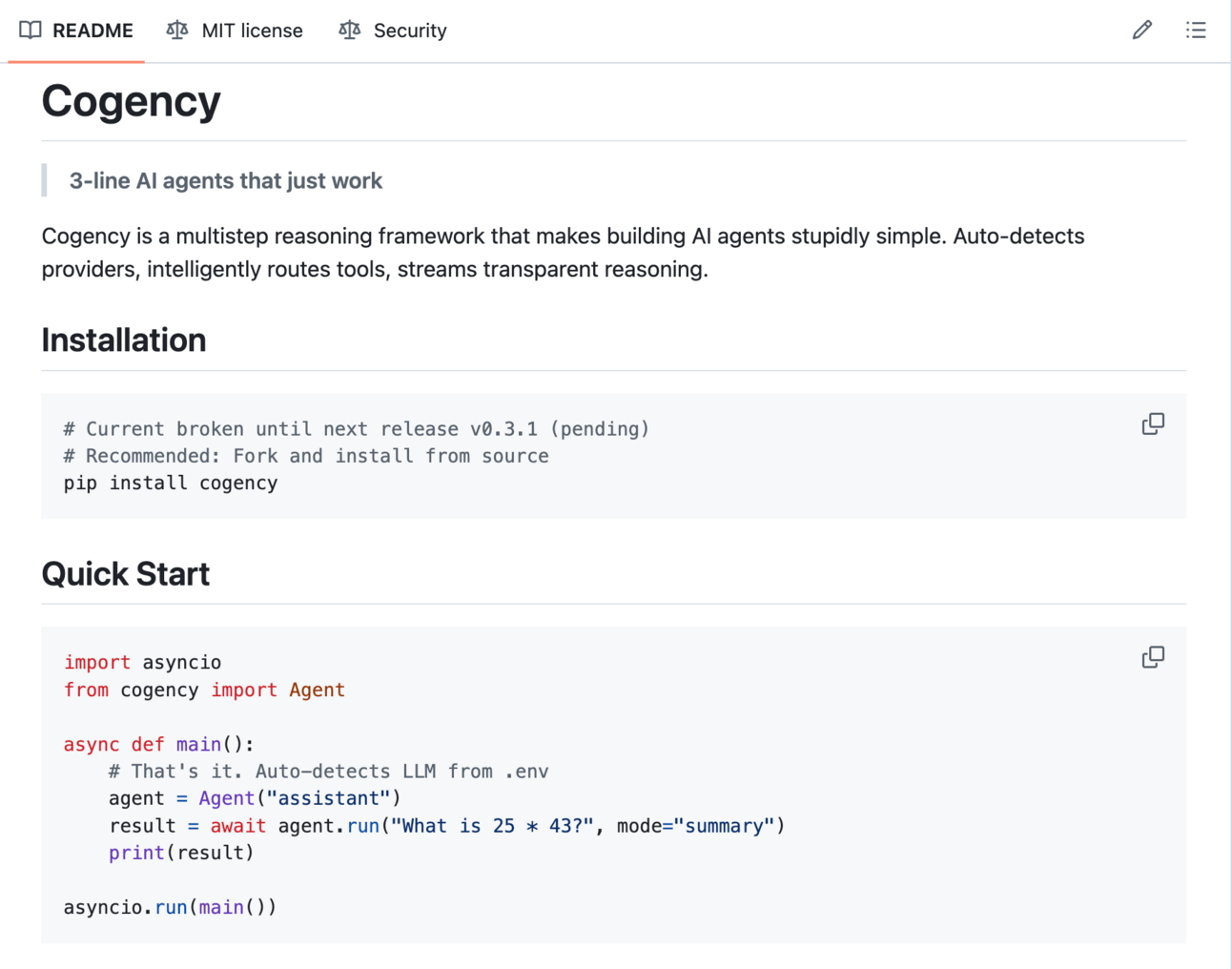

Someone just figured out how to make AI agents that actually work in 3 lines of code.

Cogency is a multistep reasoning framework that makes building AI agents stupidly simple. Its cognitive architecture separates reasoning from tool orchestration - something most frameworks completely mess up by tangling everything together.

When a web search tool was added to test extensibility, the agent failed its first search, reflected on the poor results, adapted its query strategy, and succeeded on retry without any manual intervention. This behavior wasn’t programmed; it emerged naturally from the architecture.

The breakthrough isn't another memory system; it's cognitive separation that enables true composability. While other frameworks force you to rebuild agents when adding new capabilities, Cogency's architecture makes tools plug-and-play at the reasoning level.

Key Highlights:

Emergent adaptation - Agents naturally develop retry strategies and query refinement behaviors through the reflection loop, demonstrating genuine problem-solving rather than scripted responses to common failure modes.

Cognitive composability - Separating reasoning operations from tool execution means new capabilities integrate seamlessly without breaking existing agent behavior or requiring architecture changes.

Zero-config intelligence - Auto-detects LLM providers and implements intelligent tool filtering that prevents prompt bloat, solving the "too many tools" problem that breaks most production agents.

Stream-native execution - The agent's reasoning process streams in real-time through async generators, making thought transparency a core feature rather than an observability afterthought.
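Here is a rough sketch of the shape such a loop could take: a reasoning step kept separate from the tool call, with each step streamed through an async generator. It does not use Cogency's API. `flaky_search`, `reflect`, and `run_agent` are hypothetical, and the "reflection" is a hard-coded rule rather than an LLM, so it shows the control flow only, not emergent behavior.

```python
import asyncio
from typing import AsyncIterator, Callable, List, Optional


def flaky_search(query: str) -> List[str]:
    """Hypothetical tool: only returns results when the query mentions 2025."""
    return ["result"] if "2025" in query else []


def reflect(query: str, results: List[str]) -> Optional[str]:
    """Hypothetical reasoning step: return a refined query, or None if satisfied."""
    if results:
        return None
    return query + " 2025"


async def run_agent(
    query: str, tool: Callable[[str], List[str]], max_steps: int = 3
) -> AsyncIterator[str]:
    """Act, reflect, and retry, yielding each step as it happens."""
    for _ in range(max_steps):
        yield f"act: search {query!r}"
        results = tool(query)  # tool execution knows nothing about reasoning
        refined = reflect(query, results)  # reasoning knows nothing about the tool
        if refined is None:
            yield f"done: {len(results)} result(s)"
            return
        yield f"reflect: no results, retrying with {refined!r}"
        query = refined
    yield "give up: step budget exhausted"


async def collect(events: AsyncIterator[str]) -> List[str]:
    return [event async for event in events]


if __name__ == "__main__":
    for line in asyncio.run(collect(run_agent("ai agents", flaky_search))):
        print(line)
```

Because `run_agent` only depends on the tool's call signature, a different tool can be passed in without touching the loop, which is the composability point made above.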

Quick Bites

The AI world's biggest open-source model might never see daylight. Meta has quietly shelved Llama 4 Behemoth. According to recent reports, the model suffered from chunked attention implementation issues that created reasoning blind spots, particularly at block boundaries where logical chains of thought would break. Meta's new superintelligence lab is now reportedly building a closed-source alternative.

Llama was the greatest thing that happened to open-source AI, and now China is taking over this space. It will be interesting to see how this dynamic pans out.

Mistral AI just released Voxtral, frontier open-source speech recognition models that don't just transcribe audio - they understand it, answer questions about it, and can trigger functions directly from voice commands. Available in 3B and 24B parameter versions under the Apache 2.0 license, these models handle 30-40 minute audio files with multilingual detection across major languages.

Outperform Whisper Large-v3, GPT-4o mini, and Gemini 2.5 Flash across transcription tasks

Built-in Q&A and summarization without chaining separate ASR and LLM

Direct triggering of backend APIs and workflows based on spoken commands

Deliver premium performance at less than half the price of comparable proprietary APIs

Google’s Firebase Studio now comes with an autonomous Agent mode that can build entire apps from a single prompt. The new mode offers three interaction levels, from conversational brainstorming to fully autonomous development where Gemini codes across multiple files, writes tests, and fixes errors independently. Google is also rolling out MCP support and direct Gemini CLI integration to extend workspace capabilities.

Google is handing out year-long Gemini Pro subscriptions to all Indian college students for free. Claim a full year of Gemini Pro with advanced reasoning, longer context windows, and priority access to new features.

Tools of the Trade

AgentMail: Provides AI agents with dedicated email inboxes through an API that bypasses the limitations of consumer email services. Compared with services like Gmail, it offers automated inbox management, unrestricted sending, and built-in features like semantic search and structured data extraction.

Microsoft Learn Docs MCP Server: Enables MCP clients like Cursor and GitHub Copilot to get trusted and up-to-date information directly from Microsoft's official docs. It is a remote MCP Server using streamable HTTP, which is lightweight for clients to use.

LLM Gateway: Open-source API gateway that provides a unified interface for routing requests across multiple LLM providers like OpenAI, Anthropic, and Google Vertex AI. It handles API key management, usage tracking, cost analysis, and performance monitoring through a single endpoint compatible with OpenAI's API format.

RepoMapr: Generate interactive visual diagrams of any GitHub repository using AI. You can click on nodes to view code and chat with AI to understand how the codebase functions.

Awesome LLM Apps: Build awesome LLM apps with RAG, AI agents, MCP, and more to interact with data sources like GitHub, Gmail, PDFs, and YouTube videos, and automate complex work.

Hot Takes

Calling it now, Elon will release the first NSFW video model with Grok.

Only Elon can do this. ~

Ashutosh Shrivastava

All the technical language around AI obscures the fact that there are two paths to being good with AI:

1) Deeply understanding LLMs

2) Deeply understanding how you give people instructions & information they can act on.

LLMs aren’t people but they operate enough like it to work ~

Ethan Mollick

That’s all for today! See you tomorrow with more such AI-filled content.

Don’t forget to share this newsletter on your social channels and tag Unwind AI to support us!

PS: We curate this AI newsletter every day for FREE, your support is what keeps us going. If you find value in what you read, share it with at least one, two (or 20) of your friends 😉
