Claude Sonnet-Level Agent but 90% Cheaper

+ Train your own LLM in 60 seconds, Kimi CLI Agent in 100% Python

Today’s top AI Highlights:

& so much more!

Read time: 3 mins

AI Tutorial

SEO is both critical and time-consuming for teams building businesses. Manually auditing pages, researching competitors, and synthesizing actionable recommendations can eat up hours that you'd rather spend strategizing.

In this tutorial, we'll build an AI SEO Audit Team using Google's Agent Development Kit (ADK) and Gemini 2.5 Flash. This multi-agent system autonomously crawls any webpage, researches live search results, and delivers a polished optimization report through a clean web interface that traces every step of the workflow.

We share hands-on tutorials like this every week, designed to help you stay ahead in the world of AI. If you're serious about leveling up your AI skills and staying ahead of the curve, subscribe now and be the first to access our latest tutorials.

Latest Developments

A compact model that matches frontier intelligence while burning 92% less cash per API call just dropped with full open weights.

Shanghai-based MiniMax has released MiniMax-M2, an open-source 230-billion-parameter MoE model that activates only 10 billion parameters during inference, delivering Claude Sonnet-level intelligence at 8% of the price with roughly 2x the speed.

The architecture delivers top-tier coding and agentic performance while maintaining the lean profile needed for practical deployment, meaning developers can actually afford to run complex agent workflows without watching their API bills explode.

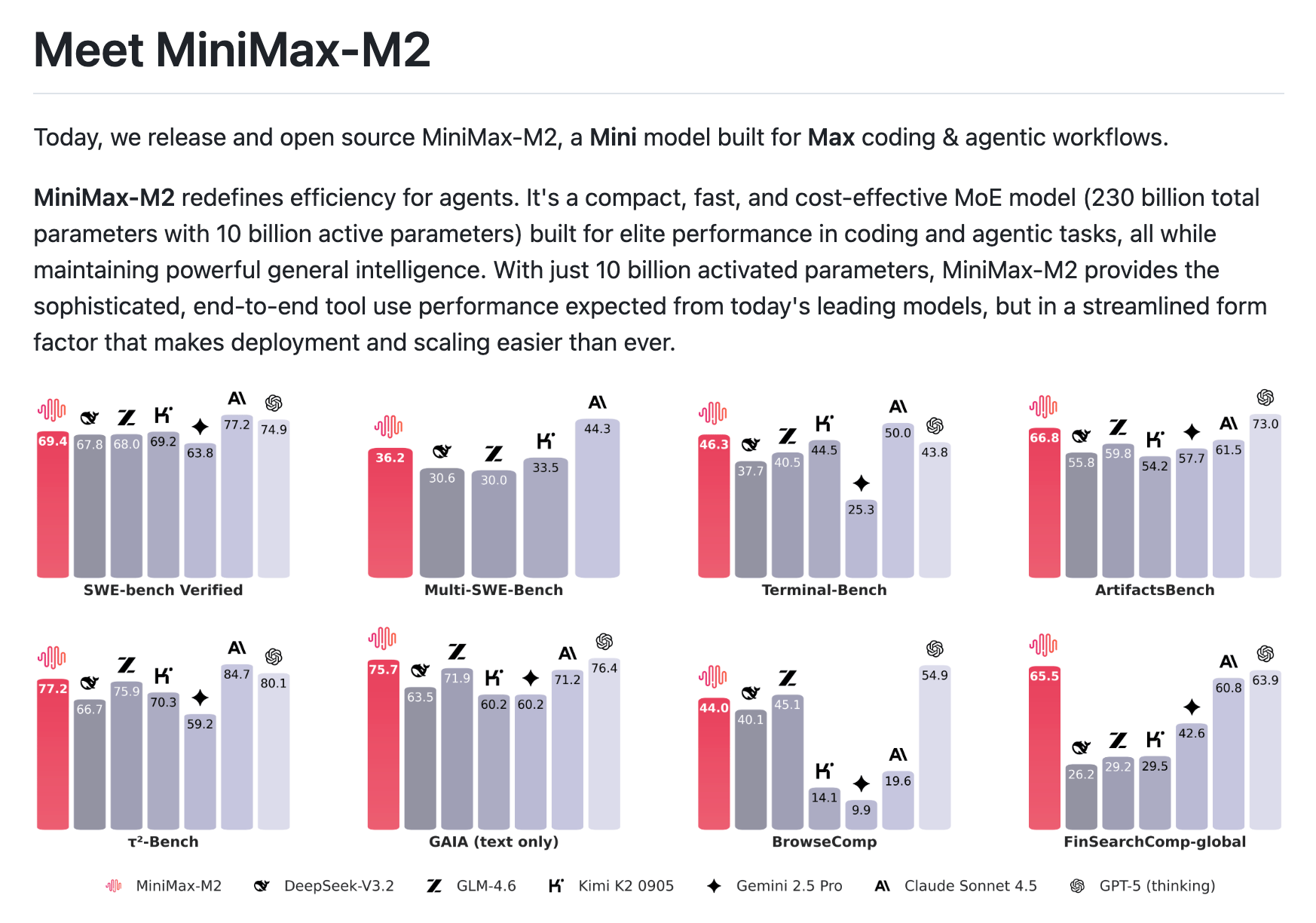

M2 ranks #1 among open-source models on Artificial Analysis's composite intelligence benchmark and outperforms several closed models on real-world coding benchmarks like SWE-bench and Terminal-Bench.

The model is free to use via the API and the MiniMax Agent platform through November 7th!

Key Highlights:

Coding capabilities - Strong performance on Terminal-Bench (46.3%), SWE-Bench Verified (69.4%), and ArtifactsBench (66.8%) shows practical coding ability across real repositories, command execution, and artifact generation. The model handles compile-test-debug cycles and multi-file edits effectively.

Tool orchestration - Excels at planning and executing complex workflows involving shell, browser, Python interpreters, and MCP tools. On BrowseComp evaluations, M2 navigates web interfaces, tracks evidence sources, and recovers from failures during long-running tasks.

Economic efficiency - The 10-billion active parameter design enables faster feedback cycles, more concurrent runs per dollar, and simpler capacity planning compared to fully-activated large models. This architecture directly addresses the compute overhead problem in agent loops.

Multiple access paths - MiniMax Agent, the general-purpose agent product powered by MiniMax-M2, and the API are both free for a limited time. The open weights are on Hugging Face, with SGLang and vLLM compatibility for self-hosting.
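To make the economics concrete, here is a rough back-of-the-envelope sketch in Python. The parameter counts and the 8% price ratio are the figures quoted above; the per-run cost and daily run count are hypothetical numbers picked only for illustration, not MiniMax or Anthropic pricing.

```python
# Figures quoted in this issue for MiniMax-M2.
TOTAL_PARAMS_B = 230     # total parameters, in billions
ACTIVE_PARAMS_B = 10     # parameters activated per token, in billions
RELATIVE_PRICE = 0.08    # M2 price as a fraction of the Claude Sonnet price

# Hypothetical workload, assumed only for this example.
BASELINE_COST_PER_RUN = 0.50   # assumed dollars per agent run on the baseline model
RUNS_PER_DAY = 1_000           # assumed number of agent runs per day


def active_fraction(active_b: float, total_b: float) -> float:
    """Share of the model's weights that is used for each token."""
    return active_b / total_b


def daily_cost(cost_per_run: float, runs: int, relative_price: float = 1.0) -> float:
    """Daily spend, scaled by a price ratio relative to the baseline model."""
    return cost_per_run * runs * relative_price


if __name__ == "__main__":
    frac = active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
    baseline = daily_cost(BASELINE_COST_PER_RUN, RUNS_PER_DAY)
    m2 = daily_cost(BASELINE_COST_PER_RUN, RUNS_PER_DAY, RELATIVE_PRICE)
    print(f"Active share of weights: {frac:.1%}")
    print(f"Baseline: ${baseline:,.2f}/day, M2: ${m2:,.2f}/day")
    print(f"Savings: {1 - m2 / baseline:.0%}")
```

Swap in your own per-run cost and volume to see what the 8% price ratio would mean for your agent loops.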

The AI Insights Every Decision Maker Needs

You control budgets, manage pipelines, and make decisions, but you still have trouble keeping up with everything going on in AI. If that sounds like you, don’t worry, you’re not alone – and The Deep View is here to help.

This free, 5-minute-long daily newsletter covers everything you need to know about AI. The biggest developments, the most pressing issues, and how companies from Google and Meta to the hottest startups are using it to reshape their businesses… it’s all broken down for you each and every morning into easy-to-digest snippets.

If you want to up your AI knowledge and stay on the forefront of the industry, you can subscribe to The Deep View right here (it’s free!).

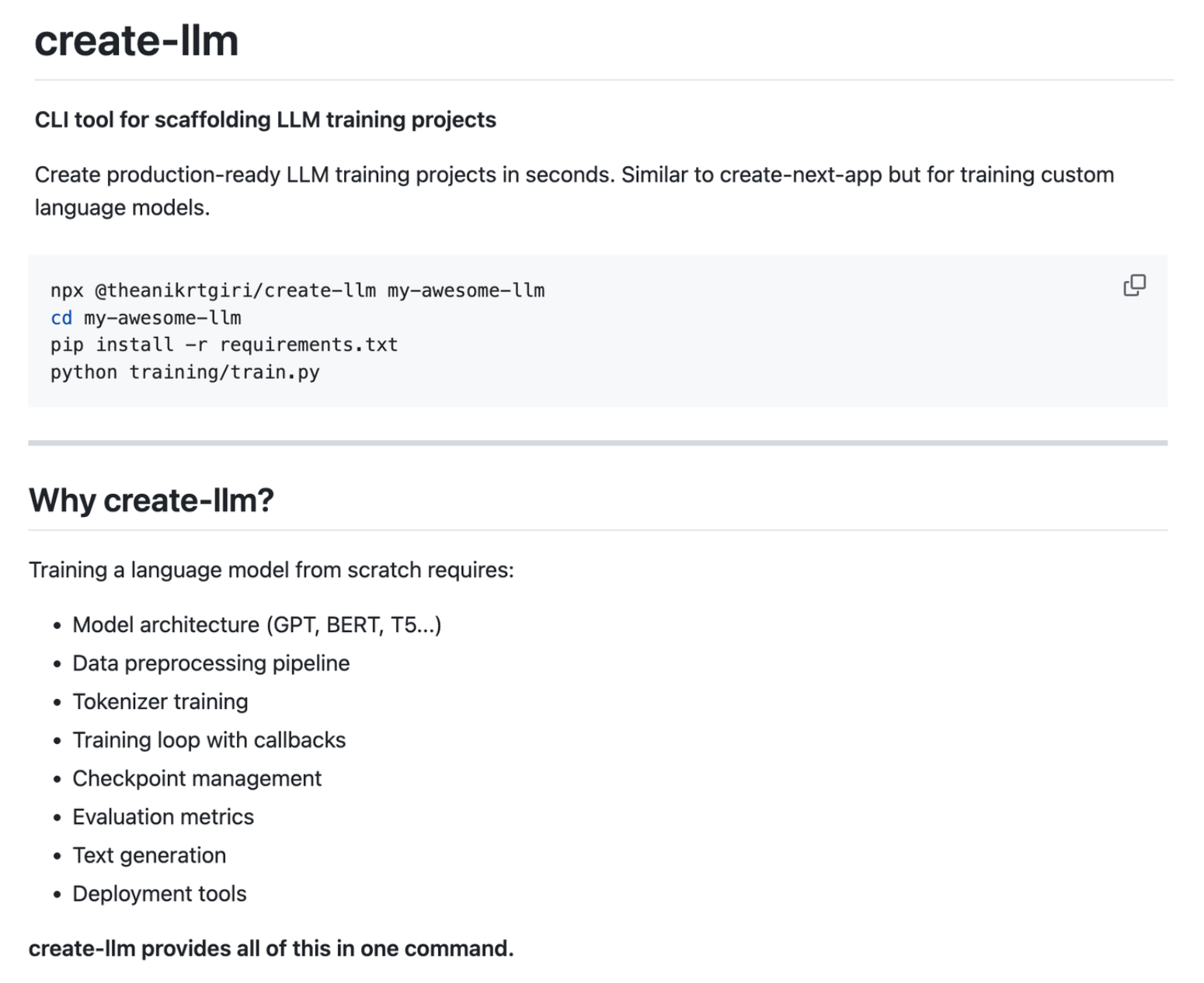

What if training an LLM was as simple as spinning up a Next.js app?

A third-year student spent six hours at 2 AM drowning in fragmented tutorials that demanded 47 manual dependencies, assumed you owned $10k worth of GPUs, or just stopped mid-tutorial with "good luck."

That frustration led to create-llm – a CLI tool that scaffolds complete LLM training projects instantly. One command gives you model architectures, tokenizer training, data pipelines, checkpoints, evaluation metrics, and deployment tools, all configured and ready.

Run npx create-llm my-llm, pick a template (from 1M to 1B parameters), and you're training in minutes – no manual dependency installation, no GPU requirements for learning, just a complete training infrastructure ready to go.

Key Highlights:

Educational by design - The nano template (1M params, 2-minute training) intentionally exposes model collapse and overfitting on limited data, turning bugs into learning moments. The tool provides diagnostic messages, suggests fixes like adding data or reducing model size, and helps you understand training dynamics through hands-on experience.

Auto-configuration - Detects vocab size from your tokenizer instead of using hardcoded values, handles sequence length mismatches automatically, manages cross-platform path issues, and provides detailed error diagnostics. The config warns when your model is too large for your dataset size before you start training.

Integrated dev tools - Launch a live Flask dashboard during training to monitor loss curves and metrics in real time, compare multiple model checkpoints side by side, use the interactive chat interface to test generations, and deploy directly to Hugging Face with one script.

Hardware flexibility - Start prototyping with nano/tiny templates on any CPU with 2-4GB RAM, scale to production with the small template on an RTX 3060, or go full research mode with the base template on A100s. The same codebase works across all scales with template-specific optimizations already configured.
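The auto-configuration idea above can be sketched in a few lines of Python. This is not create-llm's actual code: `TrainConfig`, `build_config`, `size_warnings`, and the tokens-per-parameter threshold are all hypothetical names and values, assumed here only to show the pattern of deriving vocab size from the tokenizer and warning before training starts.

```python
from dataclasses import dataclass


@dataclass
class TrainConfig:
    vocab_size: int   # derived from the tokenizer, not hardcoded
    n_params: int     # model parameter count
    n_tokens: int     # number of training tokens in the dataset


# Assumed rule of thumb for this sketch only: warn when the dataset has
# fewer training tokens than the model has parameters.
MIN_TOKENS_PER_PARAM = 1.0


def build_config(tokenizer_vocab: dict, n_params: int, n_tokens: int) -> TrainConfig:
    """Read vocab size from the tokenizer's vocabulary instead of a constant."""
    return TrainConfig(vocab_size=len(tokenizer_vocab), n_params=n_params, n_tokens=n_tokens)


def size_warnings(cfg: TrainConfig) -> list[str]:
    """Return human-readable warnings to show before training begins."""
    warnings = []
    ratio = cfg.n_tokens / cfg.n_params
    if ratio < MIN_TOKENS_PER_PARAM:
        warnings.append(
            f"Model may be too large for this dataset ({ratio:.2f} tokens per parameter). "
            "Consider adding data or reducing model size."
        )
    return warnings


if __name__ == "__main__":
    toy_vocab = {"<pad>": 0, "hello": 1, "world": 2}   # stand-in for a trained tokenizer
    cfg = build_config(toy_vocab, n_params=1_000_000, n_tokens=50_000)
    print(cfg)
    for message in size_warnings(cfg):
        print("WARNING:", message)
```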

Quick Bites

Moonshot AI releases Kimi CLI Agent written 100% in Python

Moonshot AI has released Kimi CLI, an AI agent for your terminal built entirely in Python, an interesting departure from the Rust and Go implementations we've seen lately. It handles coding tasks, doubles as a shell with mode switching via Ctrl-K, and ships with both Agent Client Protocol (communication between agents and IDEs) and MCP support out of the box. It currently runs on macOS and Linux only, and the Python implementation makes it approachable for anyone who wants to fork it or see how these agents actually work under the hood.

Anthropic brings Claude into Excel for financial analysis

Anthropic launched Claude for Excel, letting the model read and edit spreadsheets directly in a sidebar with full formula transparency. The update includes eight new data connectors (among them LSEG for live market data, Chronograph for PE portfolio tracking, and Moody's for credit intelligence) plus six pre-built skills that automate analyst grunt work like building DCF models, parsing due diligence documents, and writing coverage reports. It's currently in beta for 1,000 users before a wider rollout.

Thinking Machines’ On-policy Distillation combines RL and SFT

Mira Murati’s Thinking Machines has released another very interesting study on on-policy distillation, a training method that blends the strengths of reinforcement learning and distillation. Instead of only imitating a teacher or only learning from end rewards, it samples outputs directly from a student model, then uses a larger teacher model to grade every single token, essentially providing real-time feedback on which reasoning steps went wrong, not just whether the final answer was correct.

This method proved remarkably efficient: the team achieved 70% accuracy on AIME'24 math problems using 9-30x less compute than standard approaches, and managed to train assistant models that retain both specialized knowledge and instruction-following abilities without the usual trade-offs.

The team has released this implementation in their Tinker cookbook, where you essentially modify one line in the existing RL code by swapping the regularizer model.
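To see what "grading every token" can look like, here is a minimal sketch in plain Python. It assumes the per-token grade is the negative of a reverse-KL-style term, log p_student(token) - log p_teacher(token), computed on tokens the student sampled itself; the summary above does not spell out the exact rule, so treat that choice, the function names, and the toy probabilities as assumptions. This is not the Tinker cookbook code.

```python
import math


def per_token_reverse_kl(student_logprobs: list[float], teacher_logprobs: list[float]) -> list[float]:
    """For each student-sampled token: log p_student(token) - log p_teacher(token)."""
    if len(student_logprobs) != len(teacher_logprobs):
        raise ValueError("student and teacher must score the same tokens")
    return [s - t for s, t in zip(student_logprobs, teacher_logprobs)]


def per_token_rewards(student_logprobs: list[float], teacher_logprobs: list[float]) -> list[float]:
    """Dense reward per token: tokens the teacher finds unlikely get a large negative reward."""
    return [-kl for kl in per_token_reverse_kl(student_logprobs, teacher_logprobs)]


if __name__ == "__main__":
    # Toy probabilities for three tokens sampled from the student (made up for illustration).
    student_probs = [0.9, 0.6, 0.5]
    teacher_probs = [0.9, 0.5, 0.05]   # the teacher strongly disagrees with the third token

    rewards = per_token_rewards(
        [math.log(p) for p in student_probs],
        [math.log(p) for p in teacher_probs],
    )
    for i, reward in enumerate(rewards):
        print(f"token {i}: reward {reward:+.3f}")
    print("Most penalized token index:", rewards.index(min(rewards)))
```

The point of the dense signal is that the third token gets singled out on its own, rather than the whole sequence sharing one end-of-episode reward.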

Tools of the Trade

Twigg - A non-linear interface for LLM chats where conversations branch like Git commits, letting you explore different paths and maintain long-term projects. You can fork conversations, visualize your chat history as a tree diagram, and manually control which context gets passed to the model.

oxdraw - A CLI tool that generates Mermaid diagrams and lets you visually edit them for repositioning nodes and styling edges. The visual edits get written back to the source file as comments, preserving both version control and standard Mermaid compatibility.

DeepFabric - A CLI tool and SDK to generate synthetic training datasets using LLMs through a hierarchical topic tree or graph architecture. It outputs in OpenAI standard instruct format for model distillation, evaluation benchmarks, and fine-tuning. Supports multiple LLMs (OpenAI, Anthropic, Gemini, Ollama).

Awesome LLM Apps - A curated collection of LLM apps with RAG, AI Agents, multi-agent teams, MCP, voice agents, and more. The apps use models from OpenAI, Anthropic, Google, and open-source models like DeepSeek, Qwen, and Llama that you can run locally on your computer.

(Now accepting GitHub sponsorships)

Hot Takes

It’s hard to explain how wide of a gap there is between everything we’re seeing and talking about here and what the rest of the world is doing.

There’s usually at least a ~6 month lag for ideas or trends to escape X, and then it’s another couple years for any real adoption to occur.

~ Aaron Levie

crazy they called it context rot and not attention deficit hyperactivity disorder

~ Omar Khattab

That’s all for today! See you tomorrow with more such AI-filled content.

Don’t forget to share this newsletter on your social channels and tag Unwind AI to support us!

PS: We curate this AI newsletter every day for FREE, your support is what keeps us going. If you find value in what you read, share it with at least one, two (or 20) of your friends 😉
