Context-Aware AI Coworker

+ xAI Grok 4.1 Fast and the Agent Tools API

Today’s top AI Highlights:

& so much more!

Read time: 3 mins

AI Tutorial

Google's free 5-day AI Agents Intensive course ends today!

This course by Google's ML researchers covers everything from agent foundations to production-ready systems.

And they released 5 whitepapers (~300 pages total) that are staying up for free.

Here's what the whitepapers cover:

The 5 levels of agents: from pure reasoning to self-evolving systems

Core components: Model (the brain), Tools (the hands), Orchestration (the nervous system)

Moving from prototype to enterprise-grade systems

How tools actually work and why descriptions matter more than code

The Model Context Protocol architecture explained

Security risks nobody talks about: dynamic capability injection, tool shadowing, confused deputy problems

The difference between prompt engineering vs context engineering

Session management: conversation history + working memory

Memory as an active curation system, not just "save the conversation"

The four pillars: Effectiveness, Efficiency, Robustness, Safety

Process evaluation: judging reasoning, not just outputs

Building agents that learn from production failures

Evaluation gates, circuit breakers, and evolution loops

Turning demos into production systems

Real-time monitoring and continuous evaluation

The best part?

All five whitepapers are 100% free, with zero fluff.

Latest Developments

AI agents fail at real work because they're given access to everything - all 20+ integrations, every possible tool, mountains of context - and expected to figure out what's relevant on the fly.

They’re choking on all the noise.

This AI platform solves the context problem. Meet Dimension.dev, a collaboration platform for engineering teams with AI agents that understand you and your team, and get your work done across all your tools like Gmail, Slack, GitHub, Linear, Drive, and more.

It has a completely different architecture: before your agent touches anything, the platform runs three parallel analyses to identify which 3-5 integrations actually have relevant information, which tools the agent needs, and what learned behaviors apply to your task.

The interface is so natural that it feels like messaging another co-worker. Think ad-hoc tasks like “Look at all the customer bug reports from yesterday, create a Linear ticket, and send them an email with the tracking ID” or workflows like “When we get deployment failures from Vercel, create a ticket and post a debug summary to #deployments.”

Here’s How it Works:

Task Routing - A Decision Maker agent analyzes incoming requests and intelligently routes them: Simple Agent handles single-turn tasks like sending emails or creating calendar events, while Complex Agent orchestrates multi-step workflows like reviewing PRs, analyzing sales data, and executing conditional logic based on real-time context.

Pre-Execution Checks - Before agents start working on a task, the platform runs three parallel analyses: RAG identifies which connected apps (Gmail, Notion, Linear, GitHub, Drive, Slack) contain relevant data, graph memory predicts the tools needed from past behavior patterns, and a Skills collector retrieves learned workflows.

Creating Workflows - You can also build recurring automations by writing what you want in simple text: "every time a customer sends a bug report, create a Linear ticket, reply with tracking ID, and add gathered info as a comment". The platform automatically figures out how to chain actions, configure agent parameters, and set up integration triggers.

In-House Integrations - Every major integration is custom-built with platform-specific logic instead of generic MCP servers. For example, Gmail ranks messages by importance and generates action items, and Linear tracks cycle metrics and creates tickets from emails.

Self-Learning Agent Skills - You can teach these agents your patterns and style, and they can retrieve them in the future. For example, write "learn my PR review process" and the agent analyzes your past reviews, generates documentation, stores it with embeddings, then automatically surfaces relevant patterns in future code reviews.
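To make the routing and pre-execution steps above concrete, here is a minimal sketch in Python. It is not Dimension.dev's code: every function name, keyword table, and tool name below is a hypothetical stand-in, and simple keyword matching takes the place of the real RAG, graph memory, and skills retrieval. The only point it illustrates is the shape of the flow: three independent analyses run concurrently, and a router picks between a simple and a complex agent.

```python
import asyncio

# Hypothetical example task, adapted from the newsletter text.
TASK = ("Look at yesterday's customer bug reports, create a Linear ticket, "
        "and send an email with the tracking ID")


def _matches(task: str, table: dict[str, str]) -> list[str]:
    """Return the values whose keyword (the dict key) appears in the task text."""
    lowered = task.lower()
    return [value for keyword, value in table.items() if keyword in lowered]


async def find_relevant_apps(task: str) -> list[str]:
    """Stand-in for the RAG step: which connected apps look relevant?"""
    return _matches(task, {"ticket": "Linear", "email": "Gmail",
                           "pull request": "GitHub", "slack": "Slack"})


async def predict_tools(task: str) -> list[str]:
    """Stand-in for graph memory: which tools were used for similar tasks?"""
    return _matches(task, {"ticket": "linear.create_issue",
                           "email": "gmail.send_message"})


async def collect_skills(task: str) -> list[str]:
    """Stand-in for the skills collector: which learned workflows apply?"""
    return _matches(task, {"bug report": "bug-triage-notes",
                           "pr review": "pr-review-process"})


async def pre_execution_checks(task: str) -> dict[str, list[str]]:
    """Run the three analyses concurrently and merge them into one plan."""
    apps, tools, skills = await asyncio.gather(
        find_relevant_apps(task), predict_tools(task), collect_skills(task)
    )
    return {"apps": apps, "tools": tools, "skills": skills}


def route(task: str) -> str:
    """Toy decision maker: multi-step or triggered phrasing goes to the complex agent."""
    markers = (" and ", " then ", "when ", "every time")
    lowered = task.lower()
    return "complex" if any(marker in lowered for marker in markers) else "simple"


if __name__ == "__main__":
    print(route(TASK))
    print(asyncio.run(pre_execution_checks(TASK)))
```

The agent would then start with only the apps, tools, and skills in that plan instead of every integration, which is the narrowing the platform describes.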

Dimension.dev is completely free to try. Connect your apps, create workflows from scratch or use pre-built ones to get started, and get your work done!

Voice AI Goes Mainstream in 2025

Human-like voice agents are moving from pilot to production. In Deepgram’s 2025 State of Voice AI Report, created with Opus Research, we surveyed 400 senior leaders across North America - many from $100M+ enterprises - to map what’s real and what’s next.

The data is clear:

97% already use voice technology; 84% plan to increase budgets this year.

80% still rely on traditional voice agents.

Only 21% are very satisfied.

Customer service tops the list of near-term wins, from task automation to order taking.

See where you stand against your peers, learn what separates leaders from laggards, and get practical guidance for deploying human-like agents in 2025.

There’s a major problem with connecting to multiple MCP servers. Each server needs individual configuration, credentials, and maintenance.

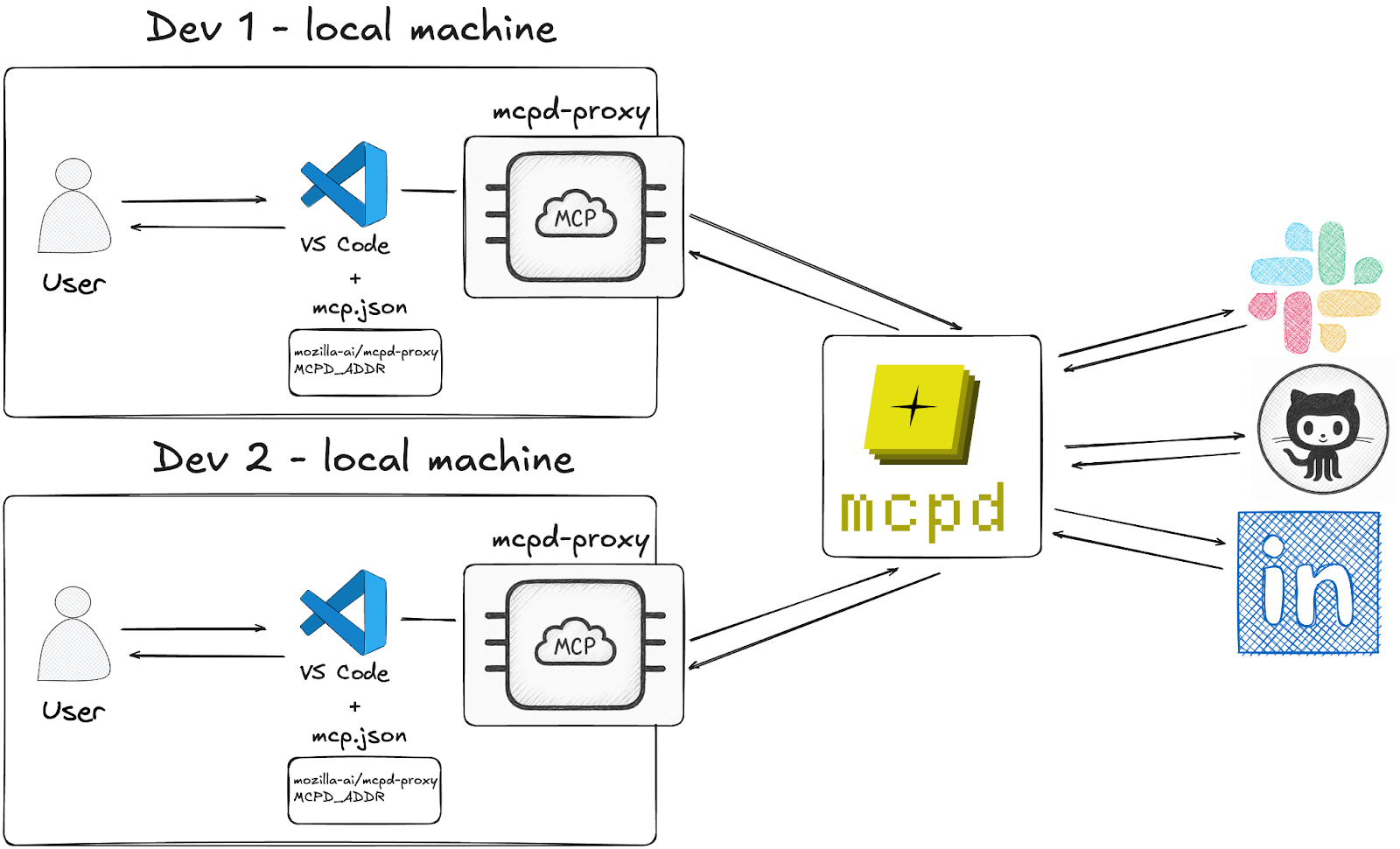

Mozilla.ai released mcpd a while back to fix this. It's basically requirements.txt for agent tooling, letting you declare all your MCP servers in one config file. But here's the catch: even with mcpd running centrally, every developer still had to manually configure each MCP server in their IDE.

A Principal Data Scientist at Microsoft's AI Consulting Practice put it perfectly when they told the Mozilla team: "I need a way to provide a URL and an integration to my team, so they can use all the tools they need in their daily development workflows via VS Code."

That feedback sparked mcpd-proxy, which solves that "last mile" problem. It's an MCP server that connects to your mcpd instance and exposes everything through a single endpoint. Developers add one entry to their IDE config with a URL and API key, and they instantly get access to every tool the platform team has provisioned - GitHub, Slack, databases, whatever. When you add a new server to mcpd, it automatically shows up in everyone's IDE.

Key Highlights:

Single configuration replaces dozens - Add mcpd-proxy to your IDE's mcp.json with your team's mcpd URL. That's it. Every MCP server your platform team manages becomes available immediately, with automatic updates when new servers are added.

Tools coordinate automatically - Ask your agent to "check PRs waiting over 5 hours and post to Slack," and it seamlessly calls github__list_pull_requests and slack__post_message. The proxy handles namespacing so nothing collides.

Centralized platform control - Platform teams manage which tools are available, server versions, access policies, and monitoring from one place. Developers just get a URL that works across local dev and production.

Best for - If you're managing shared developer infrastructure and tired of every engineer maintaining separate MCP configs, or if you want centralized control with zero-config onboarding for your team, mcpd-proxy fits right in.
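The namespacing mentioned above (names like github__list_pull_requests) can be sketched in a few lines of Python. This is an illustration of the idea only, not mcpd-proxy's implementation: the ToolProxy class, its methods, and the stub tools are all hypothetical, and the double-underscore separator is simply inferred from the tool names quoted in the text.

```python
from typing import Callable

# Separator inferred from names such as github__list_pull_requests.
SEPARATOR = "__"


class ToolProxy:
    """Toy aggregator that exposes tools from many servers under one flat namespace."""

    def __init__(self) -> None:
        self._tools: dict[str, Callable[..., object]] = {}

    def register_server(self, server: str,
                        tools: dict[str, Callable[..., object]]) -> None:
        """Add every tool of a server, prefixed with the server name."""
        if SEPARATOR in server:
            raise ValueError(f"server name must not contain {SEPARATOR!r}")
        for name, fn in tools.items():
            self._tools[f"{server}{SEPARATOR}{name}"] = fn

    def list_tools(self) -> list[str]:
        """Return all qualified tool names in sorted order."""
        return sorted(self._tools)

    def call(self, qualified_name: str, **kwargs: object) -> object:
        """Dispatch a call to the tool registered under the qualified name."""
        try:
            fn = self._tools[qualified_name]
        except KeyError:
            raise LookupError(f"unknown tool: {qualified_name}") from None
        return fn(**kwargs)


if __name__ == "__main__":
    proxy = ToolProxy()
    # Both servers define a tool called "search"; the prefix keeps them apart.
    proxy.register_server("github", {
        "list_pull_requests": lambda repo: f"open PRs in {repo}",
        "search": lambda query: f"github results for {query}",
    })
    proxy.register_server("slack", {
        "post_message": lambda channel, text: f"posted to {channel}: {text}",
        "search": lambda query: f"slack results for {query}",
    })
    print(proxy.list_tools())
    print(proxy.call("slack__post_message", channel="#deployments", text="3 PRs waiting"))
```

Because the prefix is applied at registration time, adding a new server to the registry makes its tools visible to every caller without any per-client change, which mirrors the "shows up in everyone's IDE" behavior described above.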

Quick Bites

OpenAI drops GPT-5.1-Codex-Max, outperforming Gemini 3

AI may be the only field where every day brings a new state of the art. OpenAI released GPT-5.1-Codex-Max, its new agentic coding model that already outperforms Gemini 3 on SWE-Bench-Verified and Terminal-Bench. Built specifically for long-running, multi-step tasks, the model is trained on agentic tasks across software engineering, math, research, and more. It is faster, more intelligent, and more token-efficient at every stage of the development cycle. It is now available in Codex everywhere (CLI, IDE extension, cloud, and code review) for all users except those on the Free plan. API access is coming soon.

Meta SAM 3 to segment objects from simple prompts

Meta just introduced SAM 3, a model that lets you use text prompts like “yellow school bus” to segment, track, and analyse objects across images and videos. It also launched SAM 3D, which can reconstruct 3D objects or scenes from a single image. SAM 3 is state-of-the-art across all text and visual segmentation tasks in both images and videos. Beyond vision and AR/VR workflows, it is also a good fit for creative workflows like video editing. You can try both models on the SAM Playground, and the model weights are available to download. SAM 3 is also coming soon to Instagram Edits and Vibes on the Meta AI app.

xAI Grok 4.1 Fast and the Agent Tools API

xAI has released Grok 4.1 Fast, its best tool-calling model, with a 2M context window. The model was trained on various tool-calling domains, giving it exceptional agentic tool-use capabilities and letting it outperform Gemini 3 Pro and GPT-5.1. xAI has also released the Agent Tools API, a suite of server-side tools that allow Grok 4.1 Fast to operate as a fully autonomous agent. With just a few lines of code, you can enable Grok to browse the web, search X posts, execute code, retrieve uploaded documents, and more.

Tools of the Trade

Karpathy - An open-source agentic ML engineer that autonomously trains models using Claude Code SDK, Google ADK, and Claude Scientific Skills, with support for both fully automated and interactive workflows. It runs locally with Claude Code and an OpenRouter API key.

Mosaic - Agentic video editing platform that uses multimodal AI agents to automate editing workflows like removing bad takes and creating clips from long-form content, in a node-based UI. The canvas will run your video editing on autopilot, and get you 80-90% of the way there. You can also branch and run edits in parallel to create multiple variants.

pyscn - A code quality analyzer for Python vibe coders. Built with Go and tree-sitter, it performs static analysis to detect complexity issues, dead code, duplicates, and dependency coupling.

Awesome LLM Apps - A curated collection of LLM apps with RAG, AI Agents, multi-agent teams, MCP, voice agents, and more. The apps use models from OpenAI, Anthropic, Google, and open-source models like DeepSeek, Qwen, and Llama that you can run locally on your computer.

(Now accepting GitHub sponsorships)

Hot Takes

re: gemini 3

for the past 4 years i've had the plurality of our liquid net worth in NVDA

about a month ago i sold it all and rotated into GOOG

take from that what you will

~ Siqi Chen

If you’re a software engineer, pivot to being CEO

the best model right now was not trained on nvidia chips.

that statement still feels really weird to say.

~ signüll

That’s all for today! See you tomorrow with more such AI-filled content.

Don’t forget to share this newsletter on your social channels and tag Unwind AI to support us!

PS: We curate this AI newsletter every day for FREE, your support is what keeps us going. If you find value in what you read, share it with at least one, two (or 20) of your friends 😉
