Claude 4-level Opensource Coding Model from China

PLUS: Truly agentic vibe coding platform, one interface for 20+ LLM providers

Today’s top AI Highlights:

First truly agentic vibe coding platform for serious builders

Opensource Qwen3-Coder beats DeepSeek R1, Kimi K2, Gemini 2.5 Pro

Switch between 20+ LLM providers with just one line of code

Open agentic‑search model that can even run on your phone

Turn any domain into an AI Agent’s inbox

& so much more!

Read time: 3 mins

AI Tutorial

Business consulting has always required deep market knowledge, strategic thinking, and the ability to synthesize complex information into actionable recommendations. Today's fast-paced business environment demands even more - real-time insights, data-driven strategies, and rapid response to market changes.

In this tutorial, we'll create a powerful AI business consultant using Google's Agent Development Kit (ADK) combined with Perplexity AI for real-time web research. This consultant will conduct market analysis, assess risks, and generate strategic recommendations backed by current data, all through a clean, interactive web interface.

We share hands-on tutorials like this every week, designed to help you stay ahead in the world of AI. If you're serious about leveling up your AI skills and staying ahead of the curve, subscribe now and be the first to access our latest tutorials.

Latest Developments

The vibe coding space is cluttered with hundreds of tools that have jaw-dropping demos but need months of developer work to become real products.

Emergent is a fully-agentic vibe coding platform that builds full-stack apps (frontend + Python backend) from a single sentence. It goes beyond pretty mockups and actually ships production-ready applications that can handle real users and real revenue from day one.

Think complete systems with databases, APIs, native Google Auth, and scalable infrastructure from just one prompt.

Build AI-powered SaaS tools, marketplaces, autonomous agents, MCP servers, dashboards, iOS and Android applications, browser extensions - basically anything - all with the conversational simplicity that makes vibe coding so appealing.

Key Highlights:

Complete System Architecture - Automatically configures databases, servers, third-party integrations, and handles the complex wiring between frontend and Python backend without manual setup.

True Agentic Behavior - The platform proactively builds, iterates, and problem-solves without micromanagement, functioning more like an autonomous engineering partner than a coding assistant.

Enterprise-Ready Features - Built-in Google Auth integration, LLM credits without personal API keys, automated security reviews, and scalability assessments ensure professional-grade applications.

System Prompt - You can modify the underlying system prompts that drive Emergent's development agents. This allows fine-tuned customization of coding style, architecture preferences, and development approaches.

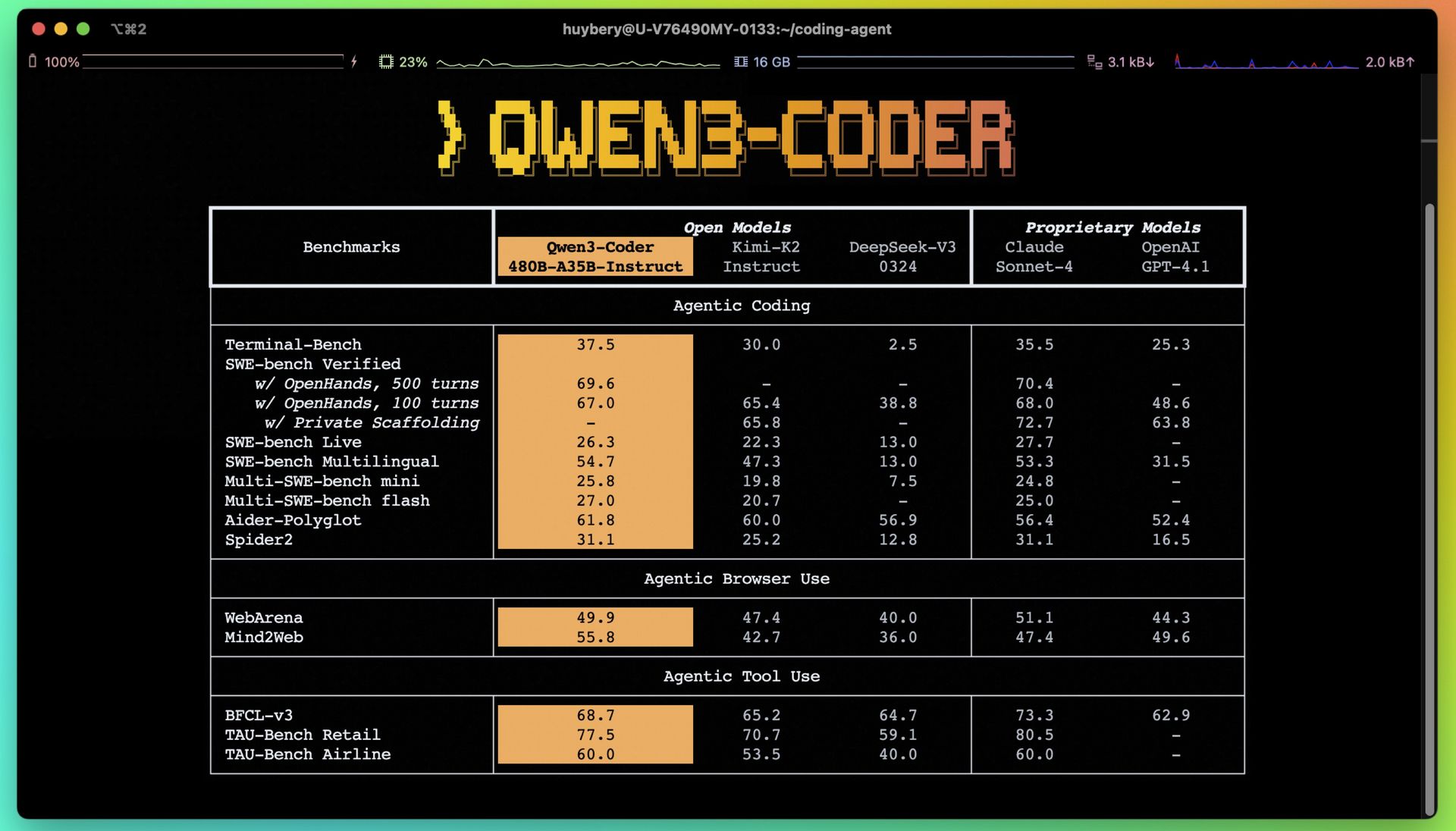

Chinese opensource AI is on a roll! This new Claude 4-level coding model outperforms DeepSeek R1, Kimi K2, Gemini 2.5 Pro, and OpenAI GPT-4.1.

Qwen3-Coder, the latest release from Alibaba's Qwen team, is a 480B-parameter Mixture-of-Experts coding specialist with 35B active parameters.

Qwen3-Coder was specifically designed for agentic multi-step coding scenarios where it needs to plan, execute, get feedback, and adapt its approach. The breakthrough comes from training the model in 20,000 parallel simulated environments where it learned to use real developer tools, handle debugging, and manage complex repository-scale projects.
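The plan, execute, get feedback, adapt cycle described above can be pictured as a simple loop. This is a minimal illustration under stated assumptions, not Qwen's training or inference code: `propose` and `execute` are hypothetical stand-ins for the model and for a developer tool such as a test runner.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

@dataclass
class Step:
    action: str       # what the model decided to do
    observation: str  # feedback returned by the tool

@dataclass
class AgentRun:
    steps: List[Step] = field(default_factory=list)
    solved: bool = False

def run_agent(
    propose: Callable[[List[Step]], str],           # hypothetical "model": history -> next action
    execute: Callable[[str], Tuple[str, bool]],     # hypothetical "tool": action -> (feedback, success)
    max_turns: int = 5,
) -> AgentRun:
    """Plan -> execute -> observe -> adapt, until the tool reports success or turns run out."""
    run = AgentRun()
    for _ in range(max_turns):
        action = propose(run.steps)          # plan the next step using all feedback so far
        observation, ok = execute(action)    # act, e.g. apply a patch and run the tests
        run.steps.append(Step(action, observation))
        if ok:
            run.solved = True
            break
    return run

# Toy usage: the "fix" only passes on the third attempt.
def toy_propose(history: List[Step]) -> str:
    return f"patch v{len(history) + 1}"

def toy_execute(action: str) -> Tuple[str, bool]:
    ok = action == "patch v3"
    return ("tests passed" if ok else "tests failed"), ok

result = run_agent(toy_propose, toy_execute)
print(result.solved, len(result.steps))  # True 3
```

The loop keeps the full history so each new proposal can react to earlier failures, which is the "adapt" part of the cycle.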

The team has also released Qwen Code, an opensource command-line tool forked from Google's Gemini CLI and adapted with customized prompts and function-calling protocols to fully unleash Qwen3-Coder's capabilities on agentic coding tasks.

Key Highlights:

Truly Agentic - Beyond code generation, the model handles complex multi-turn software engineering workflows including planning, tool usage, debugging, and iterative problem-solving through reinforcement learning training.

Massive Context - The model natively handles context windows of up to 256K tokens and can be extended to 1M tokens with extrapolation methods such as YaRN, so it can work with entire codebases rather than isolated snippets.

Dev Ecosystem - Ships with Qwen Code CLI and integrates directly with Claude Code, Cline, and other popular tools through standardized APIs.

Open Source - Available under Apache 2.0 license with full model weights downloadable from Hugging Face and ModelScope, plus immediate API access through Alibaba Cloud's DashScope platform with OpenAI-compatible endpoints.
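Because the API is described as OpenAI-compatible, a request should follow the familiar `/chat/completions` shape. The sketch below only builds the request with Python's standard library and never sends it. The base URL and the `qwen3-coder-plus` model name are assumptions to verify against DashScope's documentation, and the 4-characters-per-token estimate is a crude heuristic standing in for a real tokenizer.

```python
import json
import math
import urllib.request

# Assumptions: illustrative endpoint and model name; check DashScope's docs for current values.
BASE_URL = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"
MODEL = "qwen3-coder-plus"
NATIVE_CONTEXT_TOKENS = 256 * 1024  # "256K" taken as 262,144 tokens
CHARS_PER_TOKEN = 4                 # rough heuristic, not a real tokenizer

def estimate_tokens(text: str) -> int:
    """Very rough token estimate: about 4 characters per token, rounded up."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)

def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST to an OpenAI-style /chat/completions endpoint."""
    if estimate_tokens(prompt) > NATIVE_CONTEXT_TOKENS:
        raise ValueError("prompt likely exceeds the native 256K context window")
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

req = build_chat_request("Refactor utils.py to remove duplicated helpers.", "sk-placeholder")
print(req.get_method(), req.full_url)
# Sending it would be: urllib.request.urlopen(req), which needs a real API key and network access.
```

Any OpenAI-compatible client library could be pointed at the same base URL instead; the standard library is used here only to keep the sketch dependency-free.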

Switching between LLM providers shouldn't require rewriting your entire codebase just to test if Claude works better than GPT-4o for your specific use case.

Mozilla.ai just dropped any-llm, an opensource Python library that lets you swap between 20+ providers with nothing more than a string change - update "openai/gpt-4o" to "anthropic/claude-sonnet-4" and you're done.

Unlike heavyweight solutions that reimplement provider APIs or require proxy servers, any-llm uses official SDKs directly, eliminating compatibility headaches and keeping your setup lightweight. The library normalizes all responses to OpenAI's ChatCompletion format, so your code stays consistent regardless of which provider runs underneath. Whether you're prototyping locally or deploying to production, you get the same clean interface without vendor lock-in or architectural complexity.

Key Highlights:

Provider agnostic switching - Change models with a single string parameter while keeping all your existing code intact, supporting 20+ providers including OpenAI, Anthropic, Google, Mistral, and AWS Bedrock.

Official SDK integration - Uses provider-maintained SDKs instead of reimplementing APIs, reducing maintenance overhead and ensuring compatibility as providers update their services.

No infrastructure overhead - Skip proxy servers and gateway services entirely - just pip install, import, and start using any provider directly from your Python environment.

Consistent response format - All provider responses get normalized to OpenAI ChatCompletion objects with full Pydantic typing, making integration predictable across different models and providers.
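The core idea of parsing a `provider/model` string, dispatching to a provider-specific client, and normalizing the reply into one ChatCompletion-shaped object can be sketched in a few lines. This is a hypothetical illustration, not any-llm's actual code or API: `route_completion` and the fake adapters are made up for the example, and in a real library each adapter would wrap that provider's official SDK.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class Message:
    role: str
    content: str

@dataclass
class Choice:
    index: int
    message: Message

@dataclass
class ChatCompletion:
    model: str
    choices: List[Choice]

# An adapter takes (model_id, messages) and returns the reply text.
Adapter = Callable[[str, List[dict]], str]

# Hypothetical fake adapters; a real library would call each provider's SDK here.
def fake_openai(model_id: str, messages: List[dict]) -> str:
    return f"[openai:{model_id}] {messages[-1]['content']}"

def fake_anthropic(model_id: str, messages: List[dict]) -> str:
    return f"[anthropic:{model_id}] {messages[-1]['content']}"

ADAPTERS: Dict[str, Adapter] = {"openai": fake_openai, "anthropic": fake_anthropic}

def route_completion(model: str, messages: List[dict]) -> ChatCompletion:
    """Split 'provider/model', call that provider's adapter, normalize the reply."""
    provider, _, model_id = model.partition("/")
    if not model_id or provider not in ADAPTERS:
        raise ValueError(f"unknown provider or malformed model string: {model!r}")
    text = ADAPTERS[provider](model_id, messages)
    # Every provider's reply ends up in the same ChatCompletion shape.
    return ChatCompletion(model=model_id, choices=[Choice(0, Message("assistant", text))])

msgs = [{"role": "user", "content": "Hello!"}]
for name in ["openai/gpt-4o", "anthropic/claude-sonnet-4"]:
    print(route_completion(name, msgs).choices[0].message.content)
```

Switching providers is then just a change to the model string; callers always read `choices[0].message.content`, whichever adapter produced it.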

Quick Bites

Google Gemini 2.5 Flash-Lite is now generally available at $0.10 per million input tokens and $0.40 per million output tokens, undercutting most competitors while keeping native reasoning and the full million-token context window. Google's bet here is clear: developers want the 2.5 architecture's capabilities without the premium price tag, especially for classification and translation workloads. The model completes Google's 2.5 family (Pro, Flash, and Flash-Lite), giving teams three distinct options based on their speed, cost, and intelligence priorities.
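To put the quoted rates in concrete terms, here is a back-of-the-envelope cost calculator. It assumes the $0.10/$0.40 figures apply per million input and output text tokens and ignores any caching or batch discounts; the workload numbers are hypothetical.

```python
# Rates quoted above for Gemini 2.5 Flash-Lite, in USD per million tokens (assumed text-token pricing).
INPUT_USD_PER_M = 0.10
OUTPUT_USD_PER_M = 0.40

def flash_lite_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost for a job, ignoring caching, batch, or tier discounts."""
    return (input_tokens * INPUT_USD_PER_M + output_tokens * OUTPUT_USD_PER_M) / 1_000_000

# Hypothetical workload: classify 100,000 short texts, ~200 input and ~5 output tokens each.
cost = flash_lite_cost(100_000 * 200, 100_000 * 5)
print(f"${cost:.2f}")  # $2.20
```

Input dominates here (20M tokens cost $2.00 versus $0.20 for 0.5M output tokens), which is typical of classification workloads.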

Menlo Research's new Lucy agentic-search model shows that bigger isn't always better. This 1.7B-parameter agent can search Google for you while running entirely on your phone. The model uses machine-generated task vectors and smooth multi-category rewards to optimize its <think></think> reasoning for targeted search tasks. Scoring 78.3 on SimpleQA + MCP, Lucy punches well above its weight class, delivering near Jan-Nano-4B performance in a pocket-sized package.
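A quick estimate shows why a 1.7B-parameter model can fit on a phone. This counts only the weights (no KV cache, activations, or runtime overhead) and uses decimal gigabytes; the text doesn't say which precision Lucy ships in, so the bit widths below are assumptions.

```python
PARAMS = 1.7e9  # Lucy's parameter count as stated above

def weight_memory_gb(params: float, bits_per_param: float) -> float:
    """Memory for the weights alone, in decimal GB (1 GB = 1e9 bytes)."""
    return params * bits_per_param / 8 / 1e9

for label, bits in [("FP16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label}: {weight_memory_gb(PARAMS, bits):.2f} GB")
# FP16: 3.40 GB
# 8-bit: 1.70 GB
# 4-bit: 0.85 GB
```

At 4-bit precision the weights need under 1 GB, comfortably within the RAM of recent phones.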

GitHub Copilot now comes with GitHub Spark, an agentic tool to vibe code full-stack apps from a single prompt. Powered by Claude Sonnet 4, Spark handles everything from frontend to backend, data storage to AI integrations, with zero configuration needed. One prompt gets you a working app, one prompt lets you edit it, one click gets it deployed, and seamless GitHub integration means you can expand into full development workflows whenever you're ready. Available in public preview for Copilot Pro+ users.

Tools of the Trade

Code Sandbox MCP: A self-hosted MCP server that lets AI agents execute Python and JavaScript code in Docker or Podman containers on your own infrastructure. It handles the complete workflow, from creating the container to capturing output.
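Running untrusted code in a container largely comes down to a locked-down `docker run` invocation. The sketch below is a hypothetical illustration, not Code Sandbox MCP's implementation; the image name and resource limits are assumptions. It only builds the command, so it runs without Docker installed.

```python
import shlex
from typing import List

def sandbox_command(code: str, image: str = "python:3.12-slim", memory: str = "256m") -> List[str]:
    """Build a `docker run` argv that executes untrusted Python in a throwaway container."""
    return [
        "docker", "run",
        "--rm",               # delete the container when it exits
        "--network", "none",  # no network access from inside the sandbox
        "--memory", memory,   # cap RAM
        "--cpus", "0.5",      # cap CPU
        "--read-only",        # make the container's root filesystem read-only
        image,
        "python", "-c", code,
    ]

argv = sandbox_command("print(2 + 2)")
print(shlex.join(argv))
# To actually run it (requires Docker):
#   subprocess.run(argv, capture_output=True, text=True, timeout=30)
```

Passing an argument list rather than a shell string avoids shell-injection issues with the submitted code. Podman accepts these same flags, so replacing `"docker"` with `"podman"` should work.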

Inbound: An email management API that lets you programmatically create email addresses and route incoming messages to AI agents, webhooks, or forwarding destinations. It provides a TypeScript SDK for handling email responses and parsing.
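Routing incoming mail to different handlers is essentially a first-match rule table over recipient addresses. The Python sketch below illustrates only that idea. It is not Inbound's API (which, per the description, ships as a TypeScript SDK), and the addresses, route kinds, and webhook URL are hypothetical.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import List, Optional

@dataclass
class Route:
    pattern: str  # glob matched against the lowercased recipient address
    kind: str     # hypothetical kinds: "agent", "webhook", or "forward"
    target: str

# Most specific rules first: the first match wins.
ROUTES: List[Route] = [
    Route("support@example.com", "agent", "support-agent"),
    Route("*@billing.example.com", "webhook", "https://hooks.example.com/billing"),
    Route("*@example.com", "forward", "team@example.com"),
]

def route_email(recipient: str, routes: List[Route] = ROUTES) -> Optional[Route]:
    """Return the first route whose pattern matches, or None if nothing matches."""
    address = recipient.strip().lower()
    for route in routes:
        if fnmatchcase(address, route.pattern):
            return route
    return None

for rcpt in ["Support@Example.com", "invoices@billing.example.com", "hi@example.com", "x@other.org"]:
    match = route_email(rcpt)
    print(rcpt, "->", f"{match.kind}:{match.target}" if match else "rejected")
```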

AI File Sorter: Uses AI to automatically categorize and organize files based on their names and extensions. You can review the AI-suggested categories before confirming the sorting operation, and the app creates necessary folders and moves files accordingly.
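The review-then-apply workflow can be sketched in two steps: build a move plan without touching the disk, then create folders and move files only after approval. In this hypothetical sketch a fixed extension lookup stands in for the AI classifier, and the category names are assumptions.

```python
import shutil
import tempfile
from pathlib import Path
from typing import Dict

# Stand-in for the AI classifier: a plain extension lookup (an assumption for illustration).
CATEGORIES = {
    ".jpg": "Images", ".png": "Images",
    ".pdf": "Documents", ".docx": "Documents", ".txt": "Documents",
    ".mp3": "Audio", ".zip": "Archives",
}

def plan_sort(folder: Path) -> Dict[Path, Path]:
    """Propose a destination for each file without touching the disk."""
    plan = {}
    for f in folder.iterdir():
        if f.is_file():
            category = CATEGORIES.get(f.suffix.lower(), "Other")
            plan[f] = folder / category / f.name
    return plan

def apply_sort(plan: Dict[Path, Path]) -> None:
    """Create category folders and move files; call only after the plan is approved."""
    for src, dst in plan.items():
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.move(str(src), str(dst))

with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    for name in ["photo.JPG", "notes.txt", "misc.xyz"]:
        (root / name).write_text("demo")
    plan = plan_sort(root)
    for src, dst in sorted(plan.items()):
        print(f"{src.name} -> {dst.parent.name}/")  # review step shown to the user
    apply_sort(plan)                                # in a real app, only after confirmation
    moved = sorted(p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file())
print(moved)
```

Keeping planning and applying separate means the user can inspect or edit the proposed categories before any file moves.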

Dry: A shared memory system for Claude, ChatGPT, and any MCP client that lets you define custom data types and automatically generates graphical interfaces for your structured data.

Awesome LLM Apps: Build awesome LLM apps with RAG, AI agents, MCP, and more to interact with data sources like GitHub, Gmail, PDFs, and YouTube videos, and automate complex work.

Hot Takes

Heard GPT-5 is imminent, from a little bird.

- It’s not one model, but multiple models. It has a router that switches between reasoning, non-reasoning, and tool-using models.

- That’s why Sam said they’d “fix model naming”: prompts will just auto-route to the right model.

- GPT-6 is in training.

I just hope they’re not delaying it for more safety tests. 🙂 ~

Yuchen Jin

My bar for AGI is an AI winning a Nobel Prize for a new theory it originated. ~

Thomas Wolf

That’s all for today! See you tomorrow with more such AI-filled content.

Don’t forget to share this newsletter on your social channels and tag Unwind AI to support us!

PS: We curate this AI newsletter every day for FREE, your support is what keeps us going. If you find value in what you read, share it with at least one, two (or 20) of your friends 😉
