Open-Source Solution to Context Rot in AI Agents

+ DeepSeek V3.2 reaches Gemini 3 and Claude Sonnet 4.5 performance

Today’s top AI Highlights:

& so much more!

Read time: 3 mins

AI Tutorial

After 1.5M+ developers joined the Kaggle AI Agents Course, Google is launching Advent of Agents 2025.

25 days of hands-on agent development. One feature per day, under 5 minutes to implement.

What you get daily:

⚡ A new agent capability

💻 Copy-paste code that works

📖 Direct links to documentation

🎯 Less than 5 minutes per feature

The tech stack:

↳ Gemini 3 with context engineering and Computer Use capabilities

↳ Google Agent Development Kit (ADK) for Python

↳ Vertex AI Agent Engine for rapid deployment

↳ Production-ready templates and starter packs

How it works:

The hub unlocks new content daily. Each feature builds on the previous one, taking developers from quickstart agents to multi-agent orchestration and production deployment.

Recommended starting point:

"Introduction to Agents" whitepaper from the 5 Days of Agents with Kaggle series.

Advent of Agents 2025 starts now!

We share hands-on tutorials like this every week, designed to help you stay ahead in the world of AI. If you're serious about leveling up your AI skills and staying ahead of the curve, subscribe now and be the first to access our latest tutorials.

Latest Developments

LLMs and AI agents have a context problem, and current memory solutions aren’t sufficient.

Memory systems compress everything into summaries, vector databases lose semantic nuances, and naive context windows just keep growing until reasoning ability collapses.

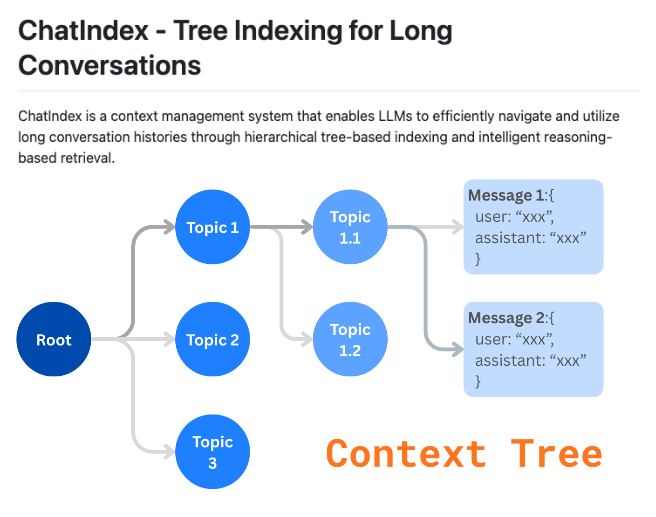

Enter ChatIndex, an open-source framework that treats conversations like structured documents instead of flat message lists. It constructs a Context Tree where your actual conversation sits at the leaf nodes while topic hierarchies organize everything above. Think of it as adding a table of contents to your chat history - higher nodes represent broad themes, lower nodes contain specific details, and the raw messages remain accessible when you need them. The retrieval phase is where it gets interesting: an LLM performs top-down tree traversal, evaluating at each node whether the summary provides sufficient information or if it needs to dig deeper. This adaptive approach means you're not feeding your entire conversation history into every query, just the relevant branches.
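To make the top-down traversal concrete, here is a minimal sketch of the idea. It is not ChatIndex's actual API: the `Node` class, the `retrieve` function, and the three verdicts ("skip", "enough", "deeper") are hypothetical names chosen for illustration, and a toy keyword check stands in for the LLM call that judges relevance at each node.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Node:
    summary: str                                        # topic summary for this subtree
    children: List["Node"] = field(default_factory=list)
    messages: List[str] = field(default_factory=list)   # raw messages (leaf nodes only)


# A judge looks at the query and one node and answers "skip", "enough", or "deeper".
# In a real system this would be an LLM call; here it is a hypothetical stand-in.
Judge = Callable[[str, Node], str]


def retrieve(node: Node, query: str, judge: Judge) -> List[str]:
    """Top-down traversal: stop at a summary when it suffices, else descend."""
    verdict = judge(query, node)
    if verdict == "skip":
        return []
    if verdict == "enough":
        return [node.summary]
    if not node.children:          # "deeper" at a leaf: return the raw messages
        return list(node.messages)
    results: List[str] = []
    for child in node.children:
        results.extend(retrieve(child, query, judge))
    return results


def make_keyword_judge(want_detail: bool) -> Judge:
    """Toy replacement for the LLM relevance call (keyword overlap only)."""
    def judge(query: str, node: Node) -> str:
        overlap = set(query.lower().split()) & set(node.summary.lower().split())
        if not overlap:
            return "skip"
        return "deeper" if want_detail else "enough"
    return judge


tree = Node(
    summary="project planning and database setup",
    children=[
        Node("database schema choices", messages=["We picked Postgres.", "Use UUID keys."]),
        Node("project holiday schedule", messages=["Office closed Dec 25."]),
    ],
)

overview = retrieve(tree, "database", make_keyword_judge(want_detail=False))
details = retrieve(tree, "database", make_keyword_judge(want_detail=True))
print(overview)
print(details)
```

The first call stops at the root summary, while the second descends only into the database branch and returns its raw messages, leaving the unrelated branch untouched.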

Key Highlights:

Multi-Resolution Access - The system returns information at variable detail levels, stopping at high-level summaries when appropriate or drilling down to raw messages when precision is required, reducing token costs without sacrificing completeness.

Lossless Preservation - Unlike memory-based architectures that store only compressed summaries, ChatIndex maintains the complete original conversation while layering a navigable topic structure on top.

B+ Tree Architecture - Borrows the efficient hierarchical structure from database indexing but replaces key-based lookups with contextual relevance judgments, keeping the tree shallow through bounded fan-out.

Complete Python Toolkit - Install via pip, configure API keys for OpenAI (building) and Anthropic (querying), then index conversations and query them with just a few lines of code. This also includes streaming responses and direct tool access for custom workflows.
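A quick back-of-the-envelope calculation shows why fan-out matters for tree depth. The sketch below assumes every node is packed full, which a topic-driven tree will not be in practice, so treat the numbers as a best case. The function name `packed_tree_height` is hypothetical and not part of ChatIndex.

```python
def packed_tree_height(n_leaves: int, fanout: int) -> int:
    """Levels above the leaves when every internal node holds `fanout` children."""
    if n_leaves < 1 or fanout < 2:
        raise ValueError("need at least one leaf and a fan-out of 2 or more")
    height = 0
    nodes = n_leaves
    while nodes > 1:
        nodes = -(-nodes // fanout)   # ceiling division: parents needed for this level
        height += 1
    return height


# How many levels a traversal must cross to reach one of 1,000 leaves.
heights = {fanout: packed_tree_height(1000, fanout) for fanout in (4, 10, 32)}
print(heights)
```

Each level of the tree costs one relevance judgment per visited node during retrieval, so a wider fan-out trades fewer levels for more children to evaluate at each step.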

Earn a master's in AI for under $2,500

AI skills aren’t optional anymore—they’re a requirement for staying competitive. Now you can earn a Master of Science in Artificial Intelligence, delivered by the Udacity Institute of AI and Technology and awarded by Woolf, an accredited higher education institution.

During Black Friday, you can lock in the savings to earn this fully accredited master’s degree for less than $2,500. Build deep expertise in modern AI, machine learning, generative models, and production deployment—on your own schedule, with real projects that prove your skills.

This offer won’t last, and it’s the most affordable way to get graduate-level training that actually moves your career forward.

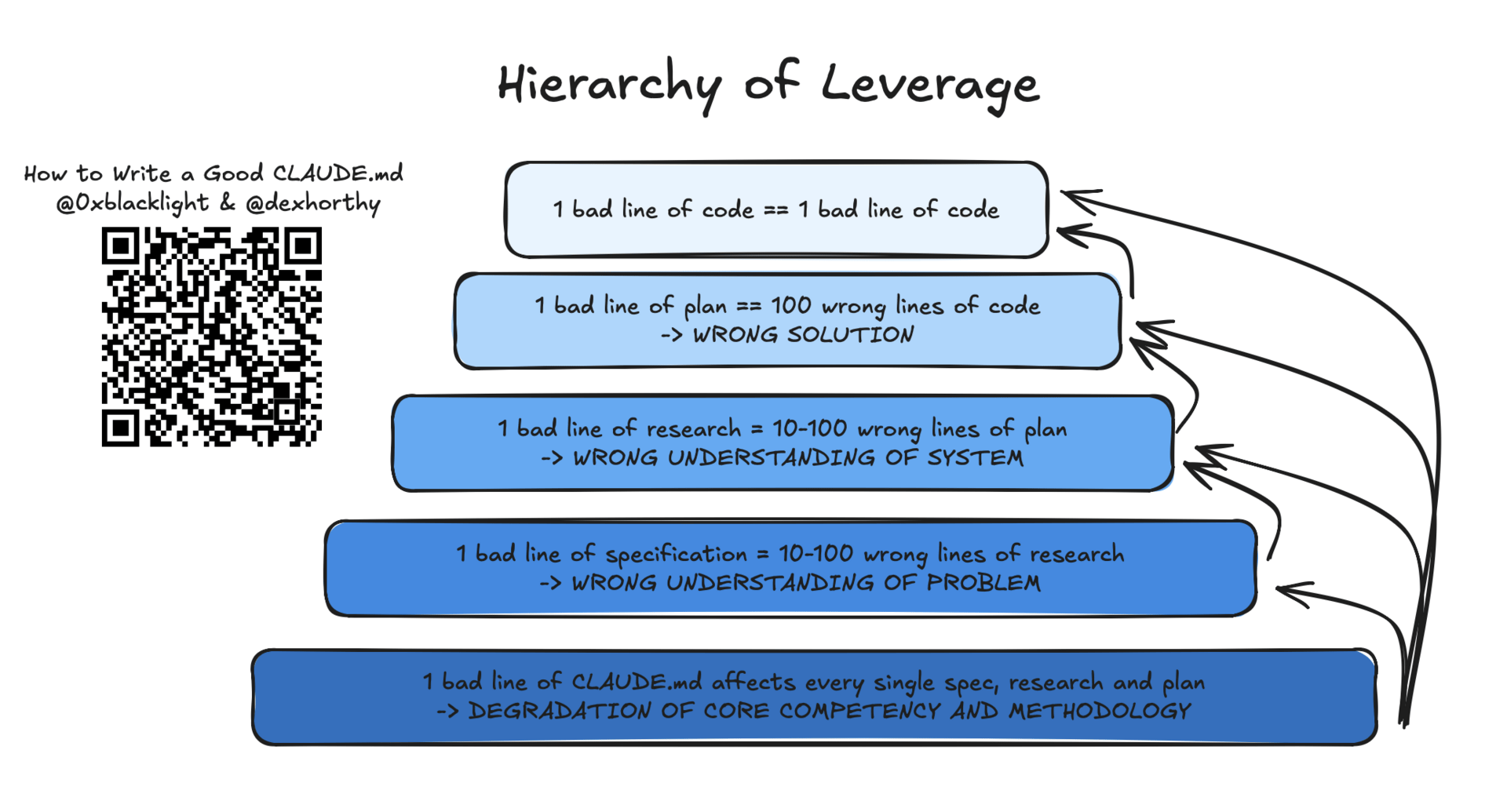

You write the most comprehensive CLAUDE.md file you can for Claude Code, but it keeps ignoring the instructions you carefully wrote.

Turns out, that's actually by design! Anthropic deliberately tells Claude to skip your file if it decides the content isn't relevant to the current task.

HumanLayer has published a very insightful guide on why most CLAUDE.md files fail and how to write one that actually works.

The core issue is that developers treat these files like configuration dumps, stuffing in every possible command, style guideline, and edge case instruction. But the guide cites research suggesting LLMs can reliably follow only about 150-200 instructions before performance degrades, and Claude Code's system prompt already uses up about 50 of those slots.

Here are some principles you need to know to write a good CLAUDE.md:

The Stateless Reality - LLMs know nothing about your codebase at the start of each session, and Claude Code adds a system reminder telling Claude to ignore CLAUDE.md content unless it's highly relevant, making bloated instruction files counterproductive.

Progressive Disclosure Pattern - Instead of cramming everything into CLAUDE.md, store task-specific instructions in separate markdown files (like building_the_project.md or database_schema.md) and let Claude decide which ones to read when needed.

Skip the Linter Role - Code style guidelines eat up instruction capacity and context window space for marginal benefit. LLMs naturally learn patterns from existing code, and deterministic tools handle formatting far better than expensive API calls.

The 60-Line Benchmark - HumanLayer's production CLAUDE.md file stays under 60 lines by focusing on broadly applicable onboarding information and delegating task-specific details to separate markdown files with descriptive names.

All these are also applicable to AGENTS.md, the open-source equivalent of CLAUDE.md for agents and harnesses like OpenCode, Zed, Cursor, and Codex.
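If you want a quick sanity check on your own file, a small script can flag when it grows past the 60-line mark and list the separate markdown files it points to. This is a rough sketch, not a tool from the HumanLayer guide: the function name `audit_instructions`, the `docs/` paths in the sample, and the choice to count only non-blank lines are all assumptions made for illustration.

```python
import re

# Matches references such as docs/database_schema.md inside the instruction file.
MD_REF = re.compile(r"[\w./-]+\.md\b")


def audit_instructions(text: str, max_lines: int = 60) -> dict:
    """Count non-blank lines and collect linked markdown files (heuristic only)."""
    lines = [ln for ln in text.splitlines() if ln.strip()]
    return {
        "line_count": len(lines),
        "over_budget": len(lines) > max_lines,
        "linked_docs": sorted(set(MD_REF.findall(text))),
    }


# Hypothetical CLAUDE.md content that delegates details to separate files.
sample = """# Project overview
Run the test suite before committing.
Read docs/building_the_project.md before changing the build.
Read docs/database_schema.md before touching migrations.
"""

report = audit_instructions(sample)
print(report)
```

Running something like this in a pre-commit hook keeps the check deterministic and out of the model's context, in line with the guide's advice to leave mechanical work to ordinary tools.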

Quick Bites

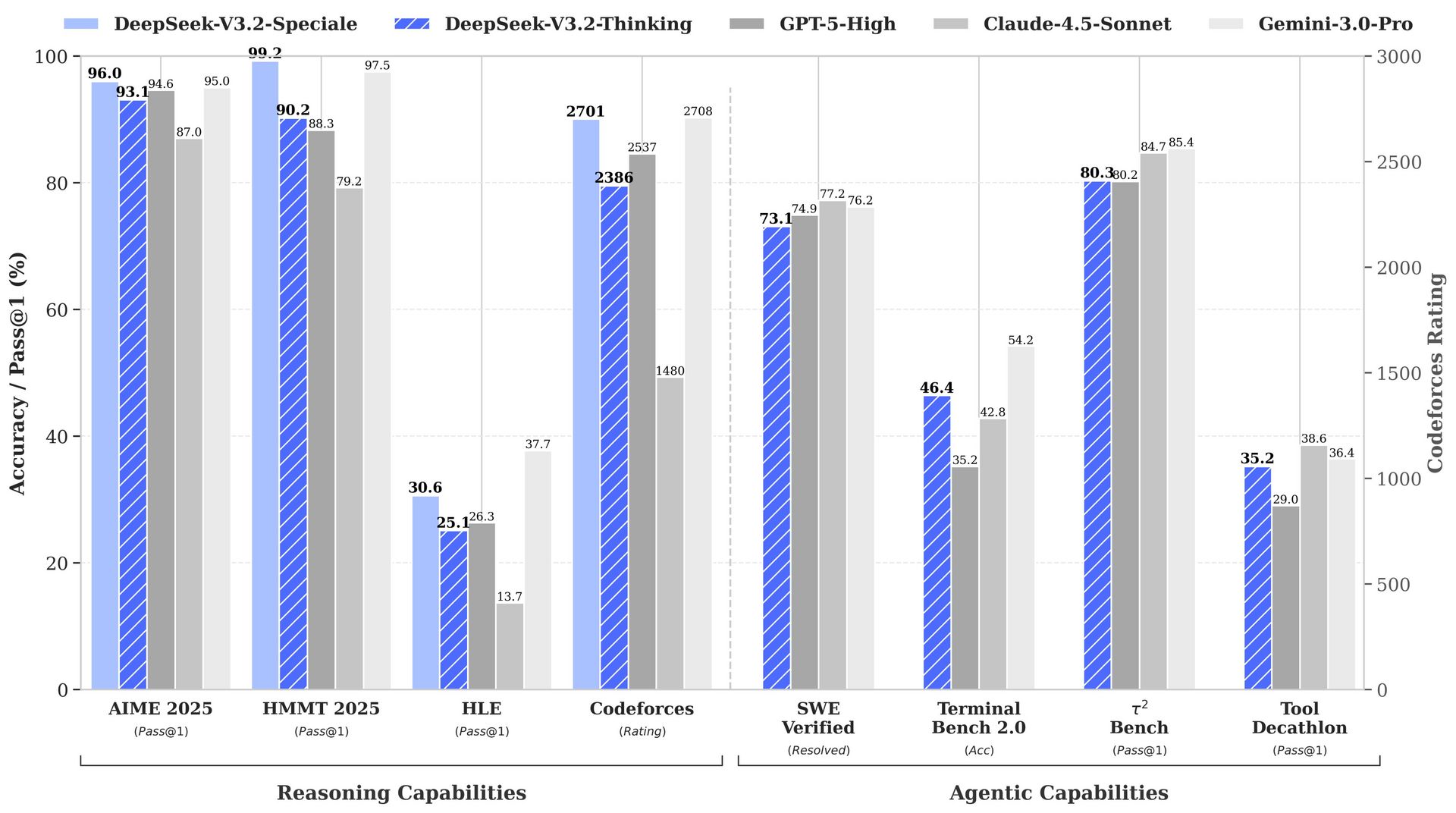

Silicon Valley might be getting DeepSeek'd for the second time. Just one week after Google dropped Gemini 3.0 Pro, DeepSeek released V3.2, which matches GPT-5 and rivals Gemini 3.0 Pro and Claude Sonnet 4.5 at more than 10x lower cost. The Chinese lab also released V3.2-Speciale (API-only), which earned gold medals in IMO, ICPC World Finals, and IOI 2025. DeepSeek V3.2 model weights are available on Hugging Face under the MIT license, and both models are available via API.

Use Agent Skills with Deep Agents

LangChain just added Anthropic's skills pattern to their deepagents-CLI, letting agents load instructions dynamically instead of cramming everything into context upfront. The approach is simple: rather than defining dozens of specialized tools, you give agents access to a computer with folders of markdown files containing task-specific instructions. It's a cleaner way to scale agent capabilities. You get token efficiency through progressive disclosure and reduced cognitive load from fewer atomic tools.
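The progressive disclosure idea can be sketched in a few lines: the agent first sees only a one-line description per skill and reads the full instructions only for the skill it picks. This is an illustration of the pattern, not the deepagents-CLI implementation. The function names, the folder layout with one SKILL.md per folder, and the use of the first line as the description are assumptions.

```python
import tempfile
from pathlib import Path
from typing import Dict


def index_skills(root: Path) -> Dict[str, str]:
    """Cheap index: skill folder name -> first line of its SKILL.md."""
    index = {}
    for skill_file in sorted(root.glob("*/SKILL.md")):
        with skill_file.open(encoding="utf-8") as fh:
            first_line = fh.readline().strip()
        index[skill_file.parent.name] = first_line.lstrip("# ").strip()
    return index


def load_skill(root: Path, name: str) -> str:
    """Full instructions, read only when the agent decides it needs them."""
    return (root / name / "SKILL.md").read_text(encoding="utf-8")


# Demo with two hypothetical skills written to a temporary folder.
demo_skills = {
    "pdf-report": "# Build a PDF report\nStep 1: collect the figures.\nStep 2: render the PDF.\n",
    "sql-migration": "# Write a SQL migration\nStep 1: diff the schema.\n",
}

with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    for name, text in demo_skills.items():
        (root / name).mkdir()
        (root / name / "SKILL.md").write_text(text, encoding="utf-8")
    index = index_skills(root)             # small: goes into the prompt up front
    body = load_skill(root, "pdf-report")  # large: loaded on demand

print(index)
```

Only the short index needs to sit in context for every request, which is where the token savings come from.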

Editable and designer-level visuals with Kimi K2 and Nano Banana Pro

Moonshot AI just dropped Kimi Agentic Slides and it’s the cleanest file-to-presentation tool we’ve seen yet: PDFs, images, or plain text in → designer-grade slides with charts and infographics out. Kimi K2 handles the reasoning and text generation while Nano Banana Pro does the heavy lifting for visuals, infographics, and illustrations. If you’ve ever dreaded making presentations, this one’s worth a spin.

Mistral ships complete 3-series: Large 3 + Edge models

Mistral dropped their full 3-series lineup today: Mistral Large 3 (a 675B sparse MoE hitting #2 on LMArena's OSS leaderboard) plus the Ministral edge models at 14B, 8B, and 3B, all with native vision and multilingual support. Everything ships Apache 2.0, including base and instruct versions, with reasoning variants coming for the smaller models (the 14B reasoning version already scores 85% on AIME '25). They're working with NVIDIA, vLLM, and Red Hat to optimize inference. Large 3 runs on a single 8×H100 node in FP4 format, making it genuinely accessible for self-hosting.
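The single-node claim is easy to check with rough arithmetic. The sketch below counts weights only and assumes the 80 GB variant of the H100. It ignores quantization scale factors, the KV cache, and activations, so real headroom is smaller than these numbers suggest.

```python
PARAMS_BILLION = 675   # Mistral Large 3 total parameters, as stated above
GPU_MEMORY_GB = 80     # assumption: 80 GB H100 variant
NUM_GPUS = 8


def weight_gb(params_billion: float, bits_per_param: int) -> float:
    """Weights-only footprint in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_param / 8


node_gb = GPU_MEMORY_GB * NUM_GPUS
footprints = {
    name: weight_gb(PARAMS_BILLION, bits)
    for name, bits in {"FP16": 16, "FP8": 8, "FP4": 4}.items()
}
fits = {name: gb <= node_gb for name, gb in footprints.items()}

for name, gb in footprints.items():
    print(f"{name}: {gb:.1f} GB of weights, fits in a {node_gb} GB node: {fits[name]}")
```

Even though a sparse MoE activates only part of the network per token, all expert weights still have to be resident in memory, which is why the 4-bit format is what makes a single node workable under these assumptions.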

Anthropic buys Bun to power Claude Code infrastructure

Anthropic bought Bun to power Claude Code's backend after the agentic coding tool reached $1B run-rate in six months. The logic is straightforward: Claude Code ships as a Bun executable to millions of users, and when AI agents generate exponentially more code, the runtime layer becomes critical infrastructure. Bun remains open-source while gaining resources to optimize for AI-driven development workflows.

Tools of the Trade

Superset - Run 10+ CLI coding agents like Claude Code, Codex, and more in parallel on your machine. For each task, Superset uses git worktrees to clone a new branch on your machine. Automate copying env variables, installing dependencies, etc., through a config file. Each workspace gets its own organized terminal system.

CodeViz - A VS Code extension that generates interactive visual maps of your codebase using LLMs, letting you navigate through architecture and function calls by clicking nodes. You can even ask questions about your code in plain English and get focused diagrams visualizing exactly what you asked about.

Better Agents - A CLI tool that configures coding agents (Claude Code, Cursor, Kilocode) with AI agent framework-specific expertise and production-ready project structures. It generates a standardized setup with scenario tests, evaluation notebooks, versioned prompts, and MCP configurations to ensure agent projects follow best practices from the start.

Awesome LLM Apps - A curated collection of LLM apps with RAG, AI Agents, multi-agent teams, MCP, voice agents, and more. The apps use models from OpenAI, Anthropic, Google, and open-source models like DeepSeek, Qwen, and Llama that you can run locally on your computer.

(Now accepting GitHub sponsorships)
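For readers curious about the worktree-per-task setup that Superset relies on, here is a minimal sketch using plain git. How Superset itself invokes git is not described here, so the branch prefix `agent/`, the `../worktrees` folder, and both function names are assumptions for illustration. The underlying command, `git worktree add -b <branch> <path>`, is standard git.

```python
import re
import subprocess
from typing import List


def worktree_command(task: str, base_dir: str = "../worktrees") -> List[str]:
    """Build the git command that gives one task its own branch and folder."""
    slug = re.sub(r"[^a-z0-9]+", "-", task.lower()).strip("-")
    return ["git", "worktree", "add", "-b", f"agent/{slug}", f"{base_dir}/{slug}"]


def create_worktree(task: str, base_dir: str = "../worktrees") -> None:
    """Run the command inside an existing repository (raises if git fails)."""
    subprocess.run(worktree_command(task, base_dir), check=True)


# Only build and show the command here; call create_worktree() inside a real repo.
cmd = worktree_command("Fix login bug")
print(" ".join(cmd))
```

Because each worktree is a separate checkout that shares the same repository history, several agents can edit files at the same time without overwriting each other's working copies.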

That’s all for today! See you tomorrow with more such AI-filled content.

Don’t forget to share this newsletter on your social channels and tag Unwind AI to support us!

PS: We curate this AI newsletter every day for FREE, your support is what keeps us going. If you find value in what you read, share it with at least one, two (or 20) of your friends 😉
