OpenAI Just Won Gold at International Math Olympiad

PLUS: Opensource alternative to xAI’s waifu, Write AI agents in Python once - use in any language

Today’s top AI Highlights:

OpenAI’s model earns gold at the world's toughest math competition

Write AI agents in Python once, use them in any language

Opensource alternative to xAI’s waifu

Mistral releases Deep Research, Voice Mode, Projects for free

Build your own AI self - 100% local and opensource

& so much more!

Read time: 3 mins

AI Tutorial

Business consulting has always required deep market knowledge, strategic thinking, and the ability to synthesize complex information into actionable recommendations. Today's fast-paced business environment demands even more - real-time insights, data-driven strategies, and rapid response to market changes.

In this tutorial, we'll create a powerful AI business consultant using Google's Agent Development Kit (ADK) combined with Perplexity AI for real-time web research. This consultant will conduct market analysis, assess risks, and generate strategic recommendations backed by current data, all through a clean, interactive web interface.

We share hands-on tutorials like this every week, designed to help you stay ahead in the world of AI. If you're serious about leveling up your AI skills and staying ahead of the curve, subscribe now and be the first to access our latest tutorials.

Latest Developments

OpenAI just delivered the most significant AI reasoning breakthrough since GPT-4, with their experimental model achieving gold medal performance on the 2025 International Mathematical Olympiad.

This isn't just another benchmark milestone. It's the first time an AI has matched elite human mathematicians on problems requiring sustained creative thinking over 100+ minutes each. The model solved 5 out of 6 IMO problems, earning 35 out of 42 points (each problem is worth 7), under the exact same constraints as human contestants: no internet, no external tools, just pure mathematical reasoning through natural language proofs.

The feat is also significant because it came from advances in general-purpose reinforcement learning and test-time compute scaling, rather than narrow mathematical fine-tuning or theorem-proving systems.

Key Highlights:

Authentic Competition Conditions - The model operated under official IMO rules with two 4.5-hour exam sessions, reading problem statements directly and generating complete natural language proofs without any external tools, web access, or specialized mathematical software.

Creative Reasoning - IMO problems demand 100+ minutes of sustained creative thinking each, a massive leap from benchmarks like GSM8K (~0.1 minutes per problem) and MATH (~1 minute) toward research-level reasoning that requires novel problem-solving approaches.

No Model Details - This is an experimental model separate from the upcoming GPT-5 release, and it won't be available publicly for several months. OpenAI emphasized that the result emerged from its core general-purpose reasoning research, part of its push toward general intelligence, rather than from domain-specific optimization.

This achievement comes with a fair share of criticism for OpenAI. Reportedly, the IMO organizers asked AI companies to wait a week after the closing ceremony to let the human competitors have their moment. And yes, rumors suggest Google DeepMind's model also achieved gold, but they're staying quiet out of respect.

But look, this is 2025, not 2015. In today's AI race, being first to market with the narrative matters as much as the technical achievement itself. OpenAI understood that controlling the story means everything - they've built their entire brand on these watershed moments. Whether it's "rude" or strategic, they just claimed the mathematical reasoning crown in the public consciousness, and that's worth more than any amount of academic courtesy.

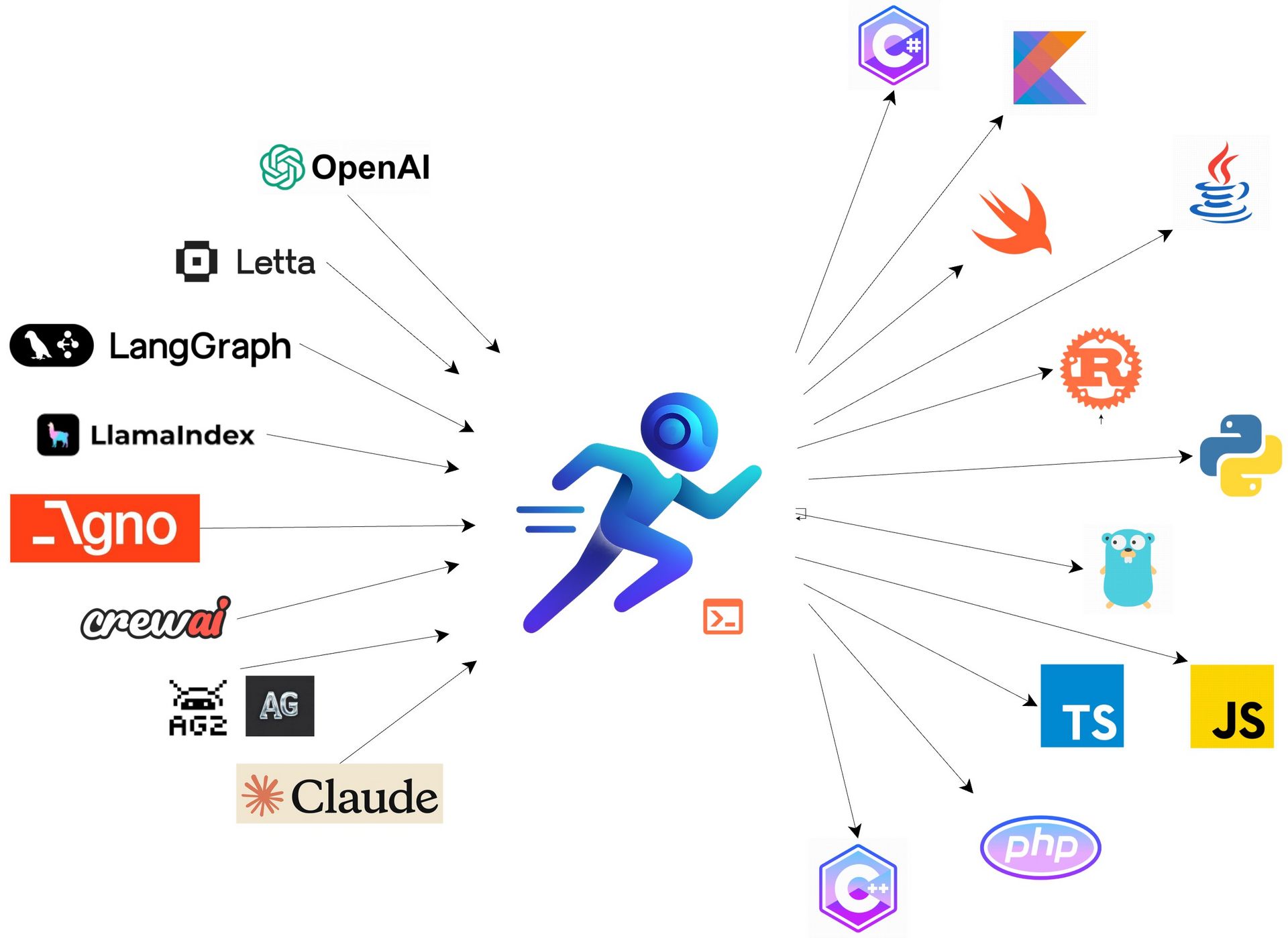

Your team's brilliant Python AI agent works perfectly, but your frontend developers need it in JavaScript, your systems team wants Rust access, and your mobile team requires Go integration.

You either force everyone to work in Python (impossible) or recreate the agent logic in multiple languages (nightmare).

RunAgent solves this by turning any Python AI agent into native function calls across programming languages. Write your agent once in Python using any framework, deploy with a single command, and access it naturally from JavaScript, Rust, Go, or anywhere else through comprehensive SDKs. The platform handles all the networking, authentication, streaming, and error handling automatically.

Key Highlights:

Native Cross-Language - Deploy Python agents once and call them like local functions in JavaScript, Rust, Go, or any language through idiomatic SDKs with automatic authentication and error handling.

Framework Agnostic - Works with LangChain, LangGraph, CrewAI, AutoGen, or custom Python frameworks through standardized configurations without requiring agent code changes.

Development to Production - Local FastAPI server with hot reload for testing transitions seamlessly to serverless cloud deployment with auto-scaling and global distribution.

Real-Time Streaming Support - Native streaming responses work naturally across all programming languages with language-specific iterators and comprehensive retry logic.

Quick Bites

Mistral just dropped Deep Research, Voice Mode, Projects, and advanced image editing (in partnership with Black Forest Labs) in its Le Chat platform. You might think that Mistral’s just trying to catch up with other platforms like ChatGPT, Gemini, Claude, and Grok. But here’s the kicker - all these features are available to free users (with unspecified limits). The company is clearly making a play for broader adoption.

Someone decided that paying $30/month for Musk's anime girlfriend wasn't quite indie enough. Project AIRI is an opensource alternative to xAI’s waifu Ani, giving you complete ownership of your AI companion. This opensource framework lets you run AI VTubers locally in your browser, complete with Live2D avatars and the ability to actually play Minecraft and Factorio alongside you. AIRI runs entirely on your hardware with support for 20+ LLM providers, persistent memory systems, and real-time voice synthesis via ElevenLabs.

Context engineering is quietly eating prompt engineering, and teams building production AI agents are discovering that the quality of information architecture matters far more than prompting tricks. Manus AI, serving millions of users, just shared their hard-won lessons from rebuilding their agent framework four times, revealing that most agent failures aren't model failures, they're context failures. For builders serious about production-ready agents, these insights cut through the noise with practical wisdom earned through real-world scale.

Design around KV-cache hit rates - Keep prompt prefixes stable and contexts append-only; cached input tokens can cost up to 10x less than uncached ones, depending on the provider

Mask tools, don't remove them - Use response prefill and logit masking to constrain action selection without breaking cache or confusing the model

Treat file systems as unlimited context - Let agents externalize memory through files rather than cramming everything into limited context windows

Leave failures in context - Don't clean up error traces; models learn better error recovery when they see their mistakes
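Three of these lessons (stable prefixes, masking instead of removing tools, and keeping failures in context) can be sketched in a few lines of Python. This is a minimal illustration, not Manus's implementation: the `AgentContext` class, the `mask_tools` helper, and the tool names are all hypothetical, and a real agent would enforce the mask at decode time rather than just computing a set.

```python
import json
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class AgentContext:
    """Hypothetical append-only context with a stable, cache-friendly prefix."""

    system_prompt: str
    tools: Tuple[str, ...]  # full tool list, fixed for the whole session
    events: List[Dict[str, str]] = field(default_factory=list)

    def append(self, kind: str, content: str) -> None:
        # Only add to the end. Earlier events, including failed actions and
        # their error traces, are never edited or deleted.
        self.events.append({"kind": kind, "content": content})

    def render(self) -> str:
        # Deterministic serialization (sorted keys, no timestamps), so the
        # same history always yields the same leading text.
        header = json.dumps(
            {"system": self.system_prompt, "tools": list(self.tools)},
            sort_keys=True,
        )
        body = [json.dumps(event, sort_keys=True) for event in self.events]
        return "\n".join([header] + body)


def mask_tools(tools: Tuple[str, ...], allowed_prefix: str) -> Set[str]:
    # Tool definitions stay in the prompt. This only narrows which names the
    # model may choose right now; a real system would enforce the set at
    # decode time (for example via logit masking or response prefill).
    return {name for name in tools if name.startswith(allowed_prefix)}


ctx = AgentContext(
    system_prompt="You are a helpful agent.",
    tools=("browser_open", "browser_click", "shell_run"),
)
before = ctx.render()
ctx.append("action", "shell_run: ls /missing")
ctx.append("error", "ls: cannot access '/missing': No such file or directory")
after = ctx.render()

print(after.startswith(before))  # True: the old context is an exact prefix
print(sorted(mask_tools(ctx.tools, "browser_")))
```

Because every new event lands after the previously rendered text, a provider that caches by prefix can reuse the earlier tokens, and the failed `shell_run` call stays visible for the model to learn from. Grouping tool names under shared prefixes such as `browser_` is one way to make masking a cheap string check.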

Tools of the Trade

Second Me: Create your own AI self - personalized AI agents trained locally on your data to act on your behalf across different platforms. These agents learn about your unique thinking patterns, represent you, form collaborative networks with other Second Mes, and create new value.

Presenton: Opensource alternative to Gamma. This AI presentation generator runs locally on your device, supporting models like OpenAI, Gemini, and Ollama for creating slides from prompts and outlines.

ChessArena: Opensource chess battlefield where LLMs like GPT, Claude, and Gemini face off. Built with Motia Framework, it scores each move against Stockfish's evaluation rather than counting wins, since LLMs are still terrible at understanding chess.

Context42: Analyzes your codebase, extracts coding patterns, and converts them into formal style guides. It examines your repository's actual conventions and generates documentation that new team members can follow to match your established practices.

Awesome LLM Apps: Build awesome LLM apps with RAG, AI agents, MCP, and more to interact with data sources like GitHub, Gmail, PDFs, and YouTube videos, and automate complex work.

Hot Takes

That’s why focus is overrated.

Lovable started as a weekend project (like most successful startups).

Go viral, then focus.

I’ve almost never seen a startup take off when nothing happened for months after launch.

Focusing on the wrong project leads to burnout (been there).

Make more small bets and let the market decide when it’s time to focus.

Massive congrats to Anton and the Lovable team! ~ Marc Lou

if you are a big company (or even small) you're likely going to buy gpt or claude from amazon microsoft or google

how crazy is it they maneuvered that ~ dax

ChatGPT is now better at math than almost all humans, an incredible accomplishment. So it's interesting that it's still worse at writing cold emails than the average founder. ~ Jared Friedman

That’s all for today! See you tomorrow with more such AI-filled content.

Don’t forget to share this newsletter on your social channels and tag Unwind AI to support us!

PS: We curate this AI newsletter every day for FREE, your support is what keeps us going. If you find value in what you read, share it with at least one, two (or 20) of your friends 😉
