Unwind AI


Opensource Agentic Coding Model from China

PLUS: Is OpenAI testing GPT-5 in stealth? | Video Overviews in Google NotebookLM

Today’s top AI Highlights:

Connect any LLM to any MCP server in 6 lines of code

China's new opensource model on par with Claude Sonnet 4

Microsoft research says these 40 jobs are at risk from AI

Is OpenAI testing GPT-5 or an opensource model in stealth?

Auto-kill AI agent loops before they rack up $2,000 in API bills

& so much more!

Read time: 3 mins

AI Tutorial

Integrating travel services as a developer often means wrestling with a patchwork of inconsistent APIs. Each API, whether for maps, weather, bookings, or calendars, brings its own implementation, auth scheme, and maintenance burden. The travel industry's fragmented tech landscape adds complexity that distracts from building great user experiences.

In this tutorial, we’ll build a multi-agent AI travel planner using MCP servers as universal connectors. By using MCP as a standardized layer, we can focus on creating intelligent agent behaviors rather than API-specific quirks. Our application will orchestrate specialized AI agents that handle different aspects of travel planning while using external services via MCP.

We'll use the Agno framework to build a team of specialized AI agents that collaborate on comprehensive travel plans, with each agent handling one aspect of the trip: maps, weather, accommodations, or calendar events.
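The team structure described above can be sketched in plain Python. This is not Agno's API: `Trip`, `TravelTeam`, and the four `*_agent` functions are hypothetical stand-ins, and each stub returns a string where a real agent would call an LLM and its MCP server. It only illustrates the coordinator pattern, where one request fans out to specialists and their answers are merged into a single plan.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Trip:
    origin: str
    destination: str
    dates: List[str]


# Hypothetical specialist agents. In a real build each would be an LLM agent
# backed by an MCP server (maps, weather, bookings, calendar); here they
# return placeholder strings so the coordination logic runs on its own.
def maps_agent(trip: Trip) -> str:
    return f"Route: {trip.origin} -> {trip.destination}"


def weather_agent(trip: Trip) -> str:
    return f"Forecast for {trip.destination} on {', '.join(trip.dates)}"


def lodging_agent(trip: Trip) -> str:
    return f"Hotel search in {trip.destination} for {len(trip.dates)} day(s)"


def calendar_agent(trip: Trip) -> str:
    return f"Calendar holds on {', '.join(trip.dates)}"


@dataclass
class TravelTeam:
    # Maps each aspect of the trip to the agent responsible for it.
    members: Dict[str, Callable[[Trip], str]] = field(default_factory=dict)

    def plan(self, trip: Trip) -> Dict[str, str]:
        # Fan the same trip out to every specialist and merge the answers
        # into one plan keyed by aspect.
        return {aspect: agent(trip) for aspect, agent in self.members.items()}


team = TravelTeam({
    "maps": maps_agent,
    "weather": weather_agent,
    "accommodation": lodging_agent,
    "calendar": calendar_agent,
})
plan = team.plan(Trip("Berlin", "Lisbon", ["2025-08-10", "2025-08-11"]))
for aspect, result in plan.items():
    print(f"{aspect}: {result}")
```

Keeping every specialist behind the same callable signature is what lets you swap a stub for a real MCP-backed agent without touching the coordinator.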

We share hands-on tutorials like this every week, designed to help you stay ahead in the world of AI. If you're serious about leveling up your AI skills, subscribe now and be the first to access our latest tutorials.

Latest Developments

MCP servers are powerful, but being limited to using them only through Claude Desktop or Cursor feels like being handed a Ferrari and told you can only drive it in a parking lot.

The official SDK comes with enough boilerplate to make even seasoned developers question their life choices.

Enter mcp-use, an opensource library that cuts through the complexity and lets you connect any LLM to any MCP server in just 6 lines of code. Built by developers who got tired of wrestling with double async loops and verbose configurations, this tool provides a clean abstraction over the official MCP SDK while supporting the full protocol functionality. The library introduces two key components: MCPClient for handling server connections and transport detection, and MCPAgent for combining LLMs with MCP capabilities through a simple, unified interface.

Key Highlights:

Custom Agents and LLMs - Build custom agents that can utilize MCP servers with the LangChain adapter. It works with any LangChain-supported model from OpenAI and Anthropic to Groq and local Llama models.

Multi-server orchestration - Connect to multiple MCP servers simultaneously and let the built-in Server Manager intelligently route tasks to the most appropriate server, reducing context flooding and improving agent efficiency.

Security - Includes sandboxed execution through E2B cloud infrastructure, tool access restrictions, and secure authentication handling, so you can deploy agents with less risk to your own systems.

Feedback and monitoring - Stream agent output as it happens with async streaming support, plus comprehensive logging and debugging tools that track every decision and action for easy troubleshooting.
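To make the multi-server routing idea concrete, here is a minimal sketch that picks a server by word overlap between the task and each server's tool description. This is a toy under stated assumptions, not how mcp-use's Server Manager actually decides: the `SERVERS` registry, `tokens`, and `route` are hypothetical, and a production router would more likely let the LLM choose or compare embeddings.

```python
import re
from typing import Dict, Set

# Hypothetical registry: server name -> short description of its tools.
# Real MCP servers advertise their tools via `tools/list`; these are stand-ins.
SERVERS: Dict[str, str] = {
    "playwright": "browse web pages, click links, take screenshots",
    "filesystem": "read write list files and directories on disk",
    "github": "search repositories, open issues, review pull requests",
}


def tokens(text: str) -> Set[str]:
    """Lowercase word set used for a crude relevance score."""
    return set(re.findall(r"[a-z]+", text.lower()))


def route(task: str, servers: Dict[str, str]) -> str:
    """Pick the server whose description shares the most words with the task.

    Only the chosen server's tools would then be exposed to the LLM, which is
    the idea behind keeping the agent's context small.
    """
    task_words = tokens(task)
    return max(servers, key=lambda name: len(task_words & tokens(servers[name])))


print(route("Open the pull requests on my repositories", SERVERS))
print(route("List the files in the docs directory", SERVERS))
```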

Practical AI for Business Leaders

The AI Report is the #1 daily read for professionals who want to lead with AI, not get left behind.

You’ll get clear, jargon-free insights you can apply across your business—without needing to be technical.

400,000+ leaders are already subscribed.

👉 Join now and work smarter with AI.

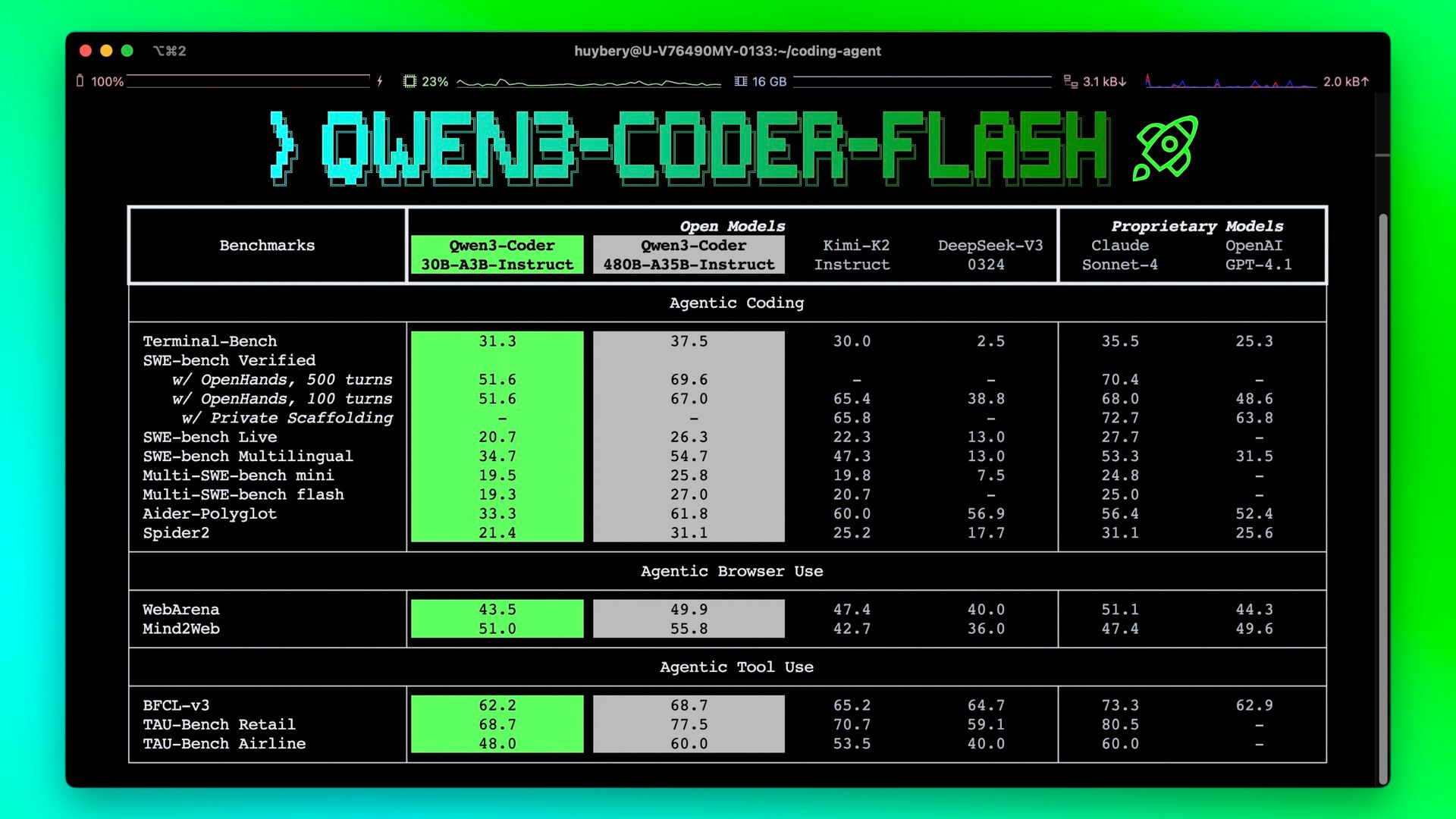

China’s Alibaba just dropped another opensource coding model that performs on par with Claude Sonnet 4 on agentic coding benchmarks while running locally on consumer hardware.

Qwen3-Coder-Flash brings agentic coding capabilities to a 30B-parameter model that needs just 33GB of RAM, making it accessible to developers who can't afford massive cloud bills or don't want to send their code to third-party APIs.

The model posts strong results on agentic benchmarks such as SWE-bench Verified (coding), WebArena (browser use), and BFCL (tool use), putting it neck-and-neck with state-of-the-art models like Claude Sonnet 4, GPT-4.1, and Kimi K2. Built on a Mixture-of-Experts architecture with 128 experts, 8 of which are activated per token, Qwen3-Coder-Flash supports 256K tokens of context natively and extends to 1M with YaRN for repository-scale understanding. Unlike the massive 480B variant released last week, this "Flash" version is far easier to run locally while keeping the agentic capabilities and tool integration that made its bigger sibling famous.
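The "8 of 128 experts" routing can be illustrated with a generic top-k softmax gate. This sketch assumes a standard formulation (keep the 8 highest gate scores, then renormalize them); the source does not describe Qwen3-Coder-Flash's exact gating, and the random logits stand in for a learned router's output. Note that 8/128 is 6.25% of experts, while 3.3B/30B is about 11% of parameters; the gap is expected because shared layers such as attention and embeddings run for every token regardless of routing.

```python
import math
import random
from typing import List, Tuple

NUM_EXPERTS = 128  # experts per MoE layer, per the figures above
TOP_K = 8          # experts activated for each token


def route_token(gate_logits: List[float], k: int = TOP_K) -> List[Tuple[int, float]]:
    """Generic top-k gating: keep the k highest-scoring experts and
    renormalize their scores with a softmax so the weights sum to 1."""
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    m = max(gate_logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(gate_logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


random.seed(0)
# Stand-in for a learned router's scores for a single token.
logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
chosen = route_token(logits)
print(len(chosen), "of", NUM_EXPERTS, "experts active for this token")
print(f"mixing weights sum to {sum(w for _, w in chosen):.3f}")
```

The token's output would then be the weighted sum of just these 8 experts' outputs, which is why compute per token tracks the active parameter count rather than the total.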

Key Highlights:

Local Powerhouse - Runs in full precision with just 33GB RAM while delivering performance comparable to Claude Sonnet 4 on agentic coding tasks, making enterprise-level AI accessible without cloud dependencies or massive hardware investments.

Competitive Results - Achieves 51.6% on SWE-bench Verified and strong scores on agentic browser use (51.0% on Mind2Web) and tool-use benchmarks, suggesting that smaller models can punch above their weight when properly optimized.

Architecture - Features 128 experts with only 8 activated per token, giving 30B total parameters with 3.3B active, plus native 256K context that extends to 1M tokens for handling entire codebases and complex workflows.

Availability - Works with Qwen Code CLI, Cline, Kiro Code, and other popular developer tools through standardized APIs. The model is also available on Hugging Face and ModelScope, opensource under the permissive Apache 2.0 license.
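A back-of-the-envelope check on the memory figure: weight storage is roughly parameter count times bytes per parameter, and all 30B parameters must stay resident (unless some are offloaded to slower storage) even though only 3.3B are active per token, since different tokens route to different experts. The byte sizes below are the standard ones for each format; which format the 33GB figure assumes is not stated here, but by this arithmetic it sits closer to ~8-bit weights plus runtime overhead than to 16-bit weights.

```python
# Rough weight-only memory estimate: parameters x bytes per parameter.
# Ignores KV cache, activations, and runtime overhead, which add to the total.
BYTES_PER_PARAM = {
    "bf16/fp16": 2.0,
    "8-bit": 1.0,
    "4-bit": 0.5,
}


def weight_gb(num_params: float, bytes_per_param: float) -> float:
    """Return weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# All experts must be loaded, not just the 3.3B parameters active per token.
TOTAL_PARAMS = 30e9

for fmt, b in BYTES_PER_PARAM.items():
    print(f"{fmt:>9}: ~{weight_gb(TOTAL_PARAMS, b):.0f} GB")
```

The long 256K-1M context adds KV-cache memory on top of these weight figures, so real usage grows with prompt length.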

Quick Bites

Ollama just shipped a desktop app that puts local LLM chat behind a clean GUI instead of terminal commands. The macOS and Windows app supports drag-and-drop for files and PDFs, configurable context lengths, and multimodal capabilities with models like Gemma 3. CLI purists can still grab standalone versions from GitHub.

Microsoft Research studied which occupations are most affected by AI, and the results are unexpected. Interpreters and translators sit at 98% AI overlap, while jobs like dishwashers and roofers clock in at 0%. The team analyzed 200,000 real Bing Copilot conversations to find the work activities people most often seek AI help with, then ranked how much AI applies to each occupation. Strikingly, salary has almost nothing to do with AI exposure, which undercuts the narrative that high earners should be the most worried.

Google's NotebookLM can now generate Video Overviews from your uploaded documents, creating narrated slides with visuals, diagrams, and data visualizations extracted from source material. You can customize these videos by specifying topics to focus on, indicating your learning goals, describing the target audience, and more.

OpenRouter just dropped Horizon Alpha, a new stealth general-purpose model optimized for long-context tasks, coding, content creation, and conversation, cloaked to gather unbiased community feedback. It’s been just over 24 hours, and AI Twitter is giving it rave reviews, especially for game and UI generation, placing it on par with Gemini 2.5 Pro and Claude 4. The model clocks 130-150 tokens/second, which suggests it may be a non-thinking model. It's completely free during the feedback period.

Mintlify just released hosted MCP servers baked into your docs: every documentation site on its platform is now consumable by AI agents through an automatically generated MCP server. Append /mcp to a Mintlify docs URL, and you get a fully functional MCP server that AI applications can interact with. The servers come preconfigured with search and can expose API endpoints as callable tools through OpenAPI integration.
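Here is a sketch of what talking to such an endpoint looks like at the protocol level. MCP messages are JSON-RPC 2.0, and `tools/list` is the standard method a client uses to discover a server's tools. The URL mapping in the hypothetical `mcp_endpoint` helper (site root plus `/mcp`) is an assumption about how the "append /mcp" rule applies, and `docs.example.com` is a placeholder; the code only builds the URL and request body without sending anything.

```python
import json
from urllib.parse import urlsplit, urlunsplit


def mcp_endpoint(docs_url: str) -> str:
    """Hypothetical helper. Assumption: the docs site's MCP server lives at
    <site root>/mcp, so any page path, query, or fragment is dropped."""
    parts = urlsplit(docs_url)
    return urlunsplit((parts.scheme, parts.netloc, "/mcp", "", ""))


def tools_list_request(request_id: int = 1) -> str:
    """JSON-RPC 2.0 body an MCP client sends to discover a server's tools.
    A real session must complete the `initialize` handshake first."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "method": "tools/list"})


endpoint = mcp_endpoint("https://docs.example.com/quickstart#install")
print(endpoint)
print(tools_list_request())
```

In a real session the client first completes the `initialize` handshake, then POSTs messages like this one to the endpoint over MCP's HTTP transport and reads the tool definitions from the response.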

Tools of the Trade

Kombai: AI agent purpose-built for frontend dev tasks. It lets you generate, run, and save high-quality frontend code from Figma, image, and text inputs. Kombai reports that it significantly outperforms frontier models like Claude Sonnet 4 and Gemini 2.5 Pro at building UX with agents and MCP tools.

Exa Fast: A search API that Exa bills as the fastest available, with average response times below 425ms. The service crawls and indexes web content itself instead of relying on third-party search engines, and Exa says it's 30%+ faster than Brave and Google SERP APIs.

AgentGuard: Your AI agent hits an infinite loop and racks up $2,000 in API charges overnight. This tool monitors AI API costs in real time and kills processes that exceed budget limits before that happens. It integrates with just 2 lines of code and tracks token usage across major AI providers.

Awesome LLM Apps: Build awesome LLM apps with RAG, AI agents, MCP, and more to interact with data sources like GitHub, Gmail, PDFs, and YouTube videos, and automate complex work.
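The budget-kill idea behind AgentGuard reduces to a running cost counter that aborts once a limit is crossed. The sketch below is hypothetical and is not AgentGuard's API: `BudgetGuard`, `BudgetExceeded`, and the per-million-token prices are illustrative assumptions, and it stops the loop by raising an exception rather than killing an external process.

```python
from dataclasses import dataclass


class BudgetExceeded(RuntimeError):
    """Raised to stop an agent loop once spend passes the limit."""


@dataclass
class BudgetGuard:
    limit_usd: float
    input_price_per_1m: float   # USD per 1M input tokens (illustrative price)
    output_price_per_1m: float  # USD per 1M output tokens (illustrative price)
    spent_usd: float = 0.0

    def record(self, input_tokens: int, output_tokens: int) -> float:
        """Add the cost of one API call; raise if the running total exceeds the limit."""
        cost = (input_tokens * self.input_price_per_1m
                + output_tokens * self.output_price_per_1m) / 1_000_000
        self.spent_usd += cost
        if self.spent_usd > self.limit_usd:
            raise BudgetExceeded(
                f"spent ${self.spent_usd:.2f} of ${self.limit_usd:.2f} budget")
        return self.spent_usd


guard = BudgetGuard(limit_usd=5.00, input_price_per_1m=3.00, output_price_per_1m=15.00)
calls = 0
try:
    while True:  # stands in for a runaway agent loop
        guard.record(input_tokens=50_000, output_tokens=2_000)
        calls += 1
except BudgetExceeded as err:
    print(f"stopped after {calls} calls: {err}")
```

At these assumed prices each call costs $0.18, so the guard lets 27 calls through and raises on the 28th, when the running total reaches $5.04.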

Hot Takes

All the technical language around AI obscures the fact that there are two paths to being good with AI:

1) Deeply understanding LLMs

2) Deeply understanding how you give people instructions & information they can act on.

LLMs aren’t people but they operate enough like it to work ~

Ethan Mollick

Not a single person from Thinky or Anthropic took Zuck’s $200M–$1B offer. Why?

1. Already rich

2. Mission > Mansion

3. they've seen AGI!

4. hate big corp life

5. upside is higher

6. value of money is diminishing post AGI

7. no faith in Meta or Zuck ~

Yuchen Jin

That’s all for today! See you tomorrow with more such AI-filled content.

Don’t forget to share this newsletter on your social channels and tag Unwind AI to support us!

PS: We curate this AI newsletter every day for FREE, your support is what keeps us going. If you find value in what you read, share it with at least one, two (or 20) of your friends 😉
