Train AI Agents with RL (No Code Changes)

+ Mistral launches AI Studio for end-to-end AI ops

Today’s top AI Highlights:

& so much more!

Read time: 3 mins

AI Tutorial

SEO optimization is both critical and time-consuming for teams building businesses. Manually auditing pages, researching competitors, and synthesizing actionable recommendations can eat up hours that you'd rather spend strategizing.

In this tutorial, we'll build an AI SEO Audit Team using Google's Agent Development Kit (ADK) and Gemini 2.5 Flash. This multi-agent system autonomously crawls any webpage, researches live search results, and delivers a polished optimization report through a clean web interface that traces every step of the workflow.

We share hands-on tutorials like this every week, designed to help you stay ahead in the world of AI. If you're serious about leveling up your AI skills and staying ahead of the curve, subscribe now and be the first to access our latest tutorials.

Latest Developments

Your AI agent works great in demos but falls apart with multi-turn coding workflows, private datasets, and unfamiliar tools. Prompt engineering is unreliable and only takes you so far.

But here's the insight: treat agent execution as something you can actually optimize. And this makes reinforcement learning a sensible approach. Supervised learning demands detailed annotations for every step of every task, which is absurdly expensive for interactive scenarios. RL only needs outcome signals - task solved or failed - and learns desirable behaviors directly from environment feedback.
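To make the "outcome signals only" idea concrete, here is a minimal sketch of a policy-gradient (REINFORCE) loop on a toy problem. Everything in it is an assumption for illustration: the three "strategies", the rule that only one of them solves the task, and the learning rate. No step is labeled as correct; the learner only sees solved (1.0) or failed (0.0) at the end of each episode.

```python
import math
import random


def softmax(logits):
    """Convert raw scores into a probability distribution."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_outcome_only(episodes=300, lr=0.5, seed=0):
    """REINFORCE on a toy task with one solved/failed signal per episode."""
    rng = random.Random(seed)
    logits = [0.0, 0.0, 0.0]   # one score per hypothetical agent strategy
    solving_strategy = 2       # assumption: only this strategy solves the task

    for _ in range(episodes):
        probs = softmax(logits)
        action = rng.choices(range(3), weights=probs)[0]
        reward = 1.0 if action == solving_strategy else 0.0  # outcome only
        # Policy-gradient step: d/d_logit log pi(action) = onehot - probs
        for i in range(3):
            onehot = 1.0 if i == action else 0.0
            logits[i] += lr * reward * (onehot - probs[i])
    return softmax(logits)


final_probs = train_outcome_only()
print([round(p, 3) for p in final_probs])
```

The policy shifts its probability mass toward the strategy that gets rewarded, even though it was never told which intermediate choice was right.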

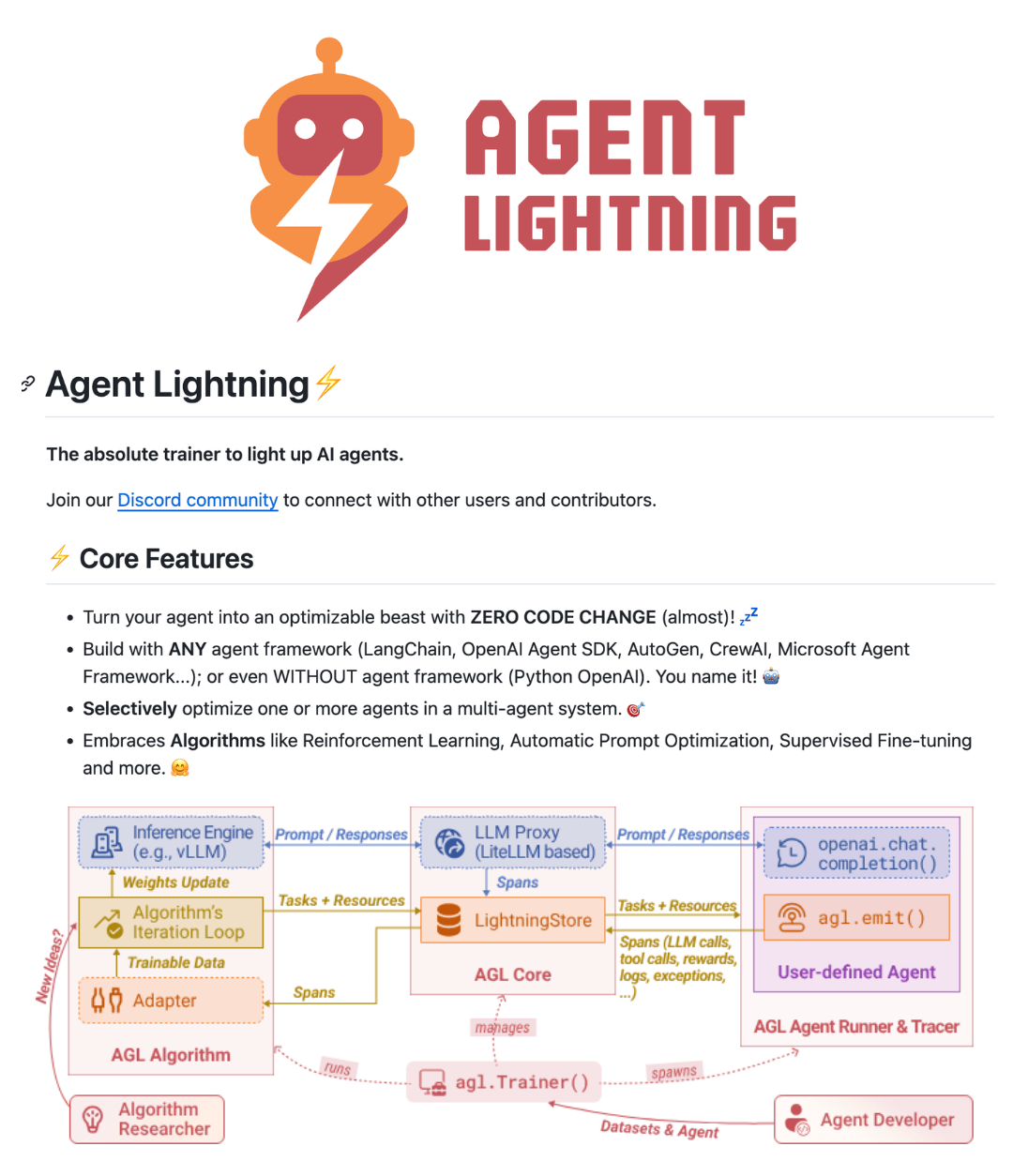

Microsoft quietly released Agent Lightning, an open-source framework that trains AI agents using reinforcement learning with almost ZERO changes to your existing code.

Your AI agent workflow runs normally, while this framework intercepts your agent's execution flow through lightweight instrumentation. It captures every LLM call, tool invocation, and reward signal as structured spans, then feeds this data into optimization algorithms that actually improve your agent's performance.

Agent Lightning works with any AI agent framework (LangChain, OpenAI Agent SDK, AutoGen, CrewAI, Microsoft Agent Framework...), or even without an agent framework (plain Python OpenAI), because it operates at the LLM interface layer.
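Here is a rough sketch of what "instrumenting at the LLM interface layer" can look like. This is not Agent Lightning's API: the `Tracer` and `Span` classes, the `fake_llm` model stand-in, and the `run_sql` tool are all hypothetical names made up for the example. The point is that the agent function stays untouched while every call it makes gets recorded as a span.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Span:
    kind: str            # "llm_call", "tool_call", or "reward"
    name: str
    payload: dict
    started_at: float = field(default_factory=time.time)


class Tracer:
    """Hypothetical tracer: records spans without changing agent logic."""

    def __init__(self):
        self.spans = []

    def wrap(self, kind, fn):
        """Return a function that behaves like fn but also records a span."""
        def wrapped(*args, **kwargs):
            result = fn(*args, **kwargs)
            payload = {"args": args, "kwargs": kwargs, "result": result}
            self.spans.append(Span(kind, fn.__name__, payload))
            return result
        return wrapped

    def reward(self, value):
        """Record the final outcome signal for this rollout."""
        self.spans.append(Span("reward", "final_reward", {"value": value}))


# Stand-ins for a real model client and a real tool.
def fake_llm(prompt):
    return "SELECT 1" if "sql" in prompt.lower() else "unknown"


def run_sql(query):
    return [1] if query == "SELECT 1" else []


def agent(llm, tool, task):
    """The agent only sees callables, so it is unaware of the tracing."""
    query = llm(f"Write SQL for: {task}")
    return tool(query)


tracer = Tracer()
rows = agent(
    tracer.wrap("llm_call", fake_llm),
    tracer.wrap("tool_call", run_sql),
    "return the number one",
)
tracer.reward(1.0 if rows == [1] else 0.0)
print([(s.kind, s.name) for s in tracer.spans])
```

Because the wrapping happens around the callables rather than inside the agent, the same trick works whether those callables come from a framework or from a bare client.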

Key Highlights:

Drop-in optimization - Add a tracer to your existing agent code and start collecting training data immediately. The system automatically instruments LLM calls and captures execution traces without requiring framework-specific rewrites.

Multiple optimization methods - Choose between reinforcement learning with VERL integration for model fine-tuning, or automatic prompt optimization for improving instructions. Swap algorithms without changing your agent code.

Independent scaling architecture - Run dozens of rollout workers on CPU machines while training happens on GPU clusters. The client-server execution strategy keeps task execution separate from model updates.

Production monitoring - Built-in logging tracks every agent decision, LLM call, and reward signal with OpenTelemetry spans. The hook system lets you add custom logic at key execution points without modifying core agent code.
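The independent scaling idea above can be sketched with nothing but the standard library. This is an illustrative toy, not Agent Lightning's client-server implementation: the `rollout_worker` and `trainer` functions, the queue-based handoff, and the "even task ids succeed" reward rule are all assumptions. Workers produce rollouts at their own pace, and the trainer consumes them in batches without knowing how many workers exist.

```python
import queue
import threading


def rollout_worker(worker_id, tasks, results):
    """CPU-side worker: pulls tasks, runs the agent, pushes back rollouts."""
    while True:
        try:
            task = tasks.get_nowait()
        except queue.Empty:
            return
        # Stand-in for running a real agent: even task ids count as solved.
        reward = float(task % 2 == 0)
        results.put({"worker": worker_id, "task": task, "reward": reward})


def trainer(results, expected, batch_size=4):
    """GPU-side stand-in: consumes rollouts in batches as they arrive."""
    batches, batch = [], []
    for _ in range(expected):
        batch.append(results.get())   # blocks until a rollout is available
        if len(batch) == batch_size:
            batches.append(batch)
            batch = []
    if batch:
        batches.append(batch)
    return batches


tasks, results = queue.Queue(), queue.Queue()
num_tasks = 10
for task_id in range(num_tasks):
    tasks.put(task_id)

workers = [
    threading.Thread(target=rollout_worker, args=(i, tasks, results))
    for i in range(3)
]
for w in workers:
    w.start()

batches = trainer(results, expected=num_tasks)
for w in workers:
    w.join()

total_reward = sum(r["reward"] for b in batches for r in b)
print(len(batches), total_reward)
```

Changing the number of workers only changes the `range(3)` line; the trainer side does not need to know.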

The Simplest Way To Create and Launch AI Agents

Imagine if ChatGPT, Zapier, and Webflow all had a baby. That's Lindy.

With Lindy, you can build AI agents and apps in minutes simply by describing what you want in plain English.

From inbound lead qualification to AI-powered customer support and full-blown apps, Lindy has hundreds of agents that are ready to work for you 24/7/365.

Stop doing repetitive tasks manually. Let Lindy automate workflows, save time, and grow your business.

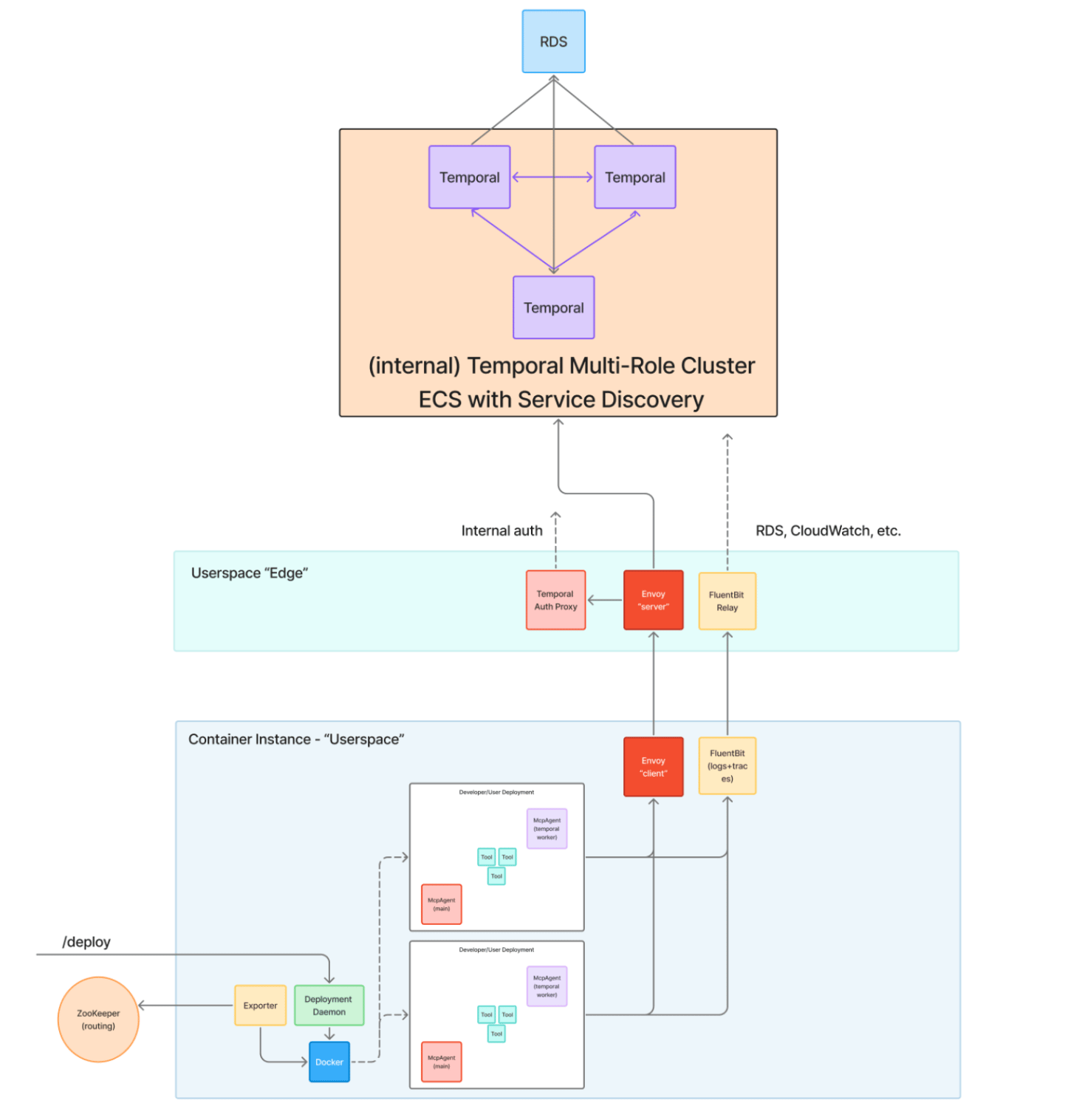

Your local MCP server can now scale to production infrastructure without changing a single line of code.

The team behind mcp-agent has released MCP-C, a cloud platform that takes your local MCP server, whether it's an agent, a ChatGPT app backend, or a custom tool API, and deploys it with five CLI commands. Everything runs as a remote SSE endpoint that implements the full MCP spec, so any MCP client can connect without code changes.

The platform uses Temporal as the runtime environment, handling pause/resume, fault tolerance, and long-running operations that agents need. Deploy with one command, and your app gets managed auth, logging, secrets handling, and durable workflow execution.

Key Highlights:

One command deployment - Run `mcp-agent deploy` and your local project bundles up, processes secrets, and ships to the cloud with Temporal-backed workflows. Your `@app.tool` and `@app.async_tool` decorators work identically in production without code changes.

Durable execution by default - Built on Temporal, so long-running agents can pause, resume, and recover from failures automatically. Workflows maintain state across interruptions, and you can inject human approval steps without building custom retry logic.

Standard MCP everywhere - Each deployment implements the full MCP spec including advanced features like sampling, notifications, and logging. Stdio MCP servers run as sidecar containers, and clients install with a single CLI command that configures the right headers and URLs.

Operational visibility - Stream logs with `mcp-agent cloud logger tail`, forward traces to any OTLP endpoint, and monitor workflow execution. Network isolation, encrypted secrets at rest, and audit logs keep your deployments secure while the platform handles the infrastructure.
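If "durable execution" sounds abstract, this toy shows the behavior it is meant to give you: a workflow that crashes midway and then resumes from where it stopped. It is a deliberately simplified sketch and not how Temporal or mcp-agent work internally (Temporal replays a recorded event history rather than reading a JSON file). The `run_durable` helper, the step names, and the `fail_at` switch are all hypothetical.

```python
import json
import os
import tempfile


def run_durable(steps, checkpoint_path, fail_at=None):
    """Run named steps in order, persisting progress after each one."""
    state = {"done": [], "value": 0}
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            state = json.load(f)   # resume from the last saved step

    for name, fn in steps:
        if name in state["done"]:
            continue               # already completed in an earlier run
        if name == fail_at:
            raise RuntimeError(f"simulated crash in {name}")
        state["value"] = fn(state["value"])
        state["done"].append(name)
        with open(checkpoint_path, "w") as f:
            json.dump(state, f)
    return state


steps = [
    ("fetch", lambda v: v + 10),
    ("transform", lambda v: v * 2),
    ("publish", lambda v: v + 1),
]

path = os.path.join(tempfile.mkdtemp(), "workflow.json")
try:
    run_durable(steps, path, fail_at="transform")   # first run crashes midway
except RuntimeError as err:
    print("interrupted:", err)

final = run_durable(steps, path)                    # second run resumes
print(final)
```

The second run skips `fetch` because its result was already saved, which is the property you would otherwise have to build with custom retry logic.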

Quick Bites

Open Agentic environments to bundle tools, APIs, and permissions

Meta and Hugging Face are launching OpenEnv, a standardized spec for agentic execution environments. The idea is simple: instead of exposing agents to millions of tools unsafely, you create sandboxed agentic environments that bundle exactly what's needed - tools, APIs, credentials, context for specific tasks. These environments work across both training and deployment, so you can RL-train an agent in the same environment it'll run in production. The Hub goes live next week. You can check out some example environments here.
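As a rough picture of "bundle exactly what's needed", here is a hypothetical sandboxed environment with an allowlist of tools and a step budget. It does not follow the OpenEnv spec; the `SandboxedEnv` class, its `reset` and `step` methods, and the `ToolNotAllowed` error are invented for this sketch.

```python
class ToolNotAllowed(Exception):
    """Raised when an agent asks for a tool outside the environment."""


class SandboxedEnv:
    """Hypothetical environment exposing only an explicit set of tools."""

    def __init__(self, tools, max_steps=5):
        self._tools = dict(tools)   # the allowlist: name -> callable
        self._max_steps = max_steps
        self._steps = 0

    def reset(self):
        """Start a fresh episode and tell the agent what it may use."""
        self._steps = 0
        return {"available_tools": sorted(self._tools)}

    def step(self, tool_name, **kwargs):
        """Run one allowed tool call and report whether the budget is spent."""
        if tool_name not in self._tools:
            raise ToolNotAllowed(tool_name)
        self._steps += 1
        observation = self._tools[tool_name](**kwargs)
        done = self._steps >= self._max_steps
        return observation, done


env = SandboxedEnv({"add": lambda a, b: a + b}, max_steps=2)
print(env.reset())
print(env.step("add", a=2, b=3))

try:
    env.step("delete_files", path="/")
except ToolNotAllowed as err:
    print("blocked:", err)
```

The same object could back both a training loop and a deployed agent, which is the property the announcement highlights.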

Build full-stack agent UIs in CLI with one command

Moving an AI agent from the terminal to a truly interactive UI is now a one-line command. CopilotKit has released the AG-UI CLI, which scaffolds a complete full-stack setup in seconds. Pick your agent framework (LangGraph, CrewAI, etc.) and frontend stack, and you get working examples of streaming chat, generative UI components, and bi-directional state sync out of the box. Just run `npx create-ag-ui-app@latest` and that’s it!

Mistral launches AI Studio for end-to-end AI operations

After watching enterprise teams get stuck at the prototype stage, Mistral just released AI Studio to close the gap between experimentation and production. The platform gives you three core pieces: full observability with custom judges and evaluations, a fault-tolerant runtime built on Temporal, and a Registry that tracks lineage across every asset in your AI stack. It's built on the same infrastructure Mistral uses internally to serve millions of users, now packaged for teams that need real governance and observability. Currently in private beta.

Tools of the Trade

AIO Sandbox - An all-in-one agent sandbox environment that combines Browser, Shell, File, MCP operations, and VSCode Server in a single Docker container. Built on cloud-native lightweight sandbox technology, it provides a unified, secure execution environment for AI agents and developers.

xyflow - Open-source React and Svelte libraries for building node-based interfaces and interactive diagrams. The components come ready to use out of the box and support full customization for apps like workflow editors, data processing tools, and visual programming interfaces.

Dictly - On-device voice dictation app for macOS and iOS that transcribes speech in real-time with sub-200ms latency and inserts formatted text directly where your cursor is. You can customize processing pipelines for text cleanup, formatting, and tone adjustment, all running locally. Has a free tier too.

Awesome LLM Apps - A curated collection of LLM apps with RAG, AI Agents, multi-agent teams, MCP, voice agents, and more. The apps use models from OpenAI, Anthropic, Google, and open-source models like DeepSeek, Qwen, and Llama that you can run locally on your computer.

(Now accepting GitHub sponsorships)

Hot Takes

Why spend time releasing yet another browser or an n8n clone when you could be on the brink of reaching AGI/ASI and making everything irrelevant?

Unless...

~ Santiago

Software engineers should be evaluated by the number of lines of code they don’t write.

That’s all for today! See you tomorrow with more such AI-filled content.

Don’t forget to share this newsletter on your social channels and tag Unwind AI to support us!

PS: We curate this AI newsletter every day for FREE, your support is what keeps us going. If you find value in what you read, share it with at least one, two (or 20) of your friends 😉
